AI-generated code has become a cornerstone of modern software development. Tools like GitHub Copilot and Amazon CodeWhisperer allow teams to automate repetitive tasks, reduce debugging cycles, and release features faster than ever before.

But speed without safeguards comes at a cost. Hidden vulnerabilities, compliance blind spots, and insecure coding practices often ride along with AI-driven automation. Without systematic testing and compliance guardrails, organizations risk turning efficiency gains into security liabilities.

Why AI-Generated Code Accelerates Development

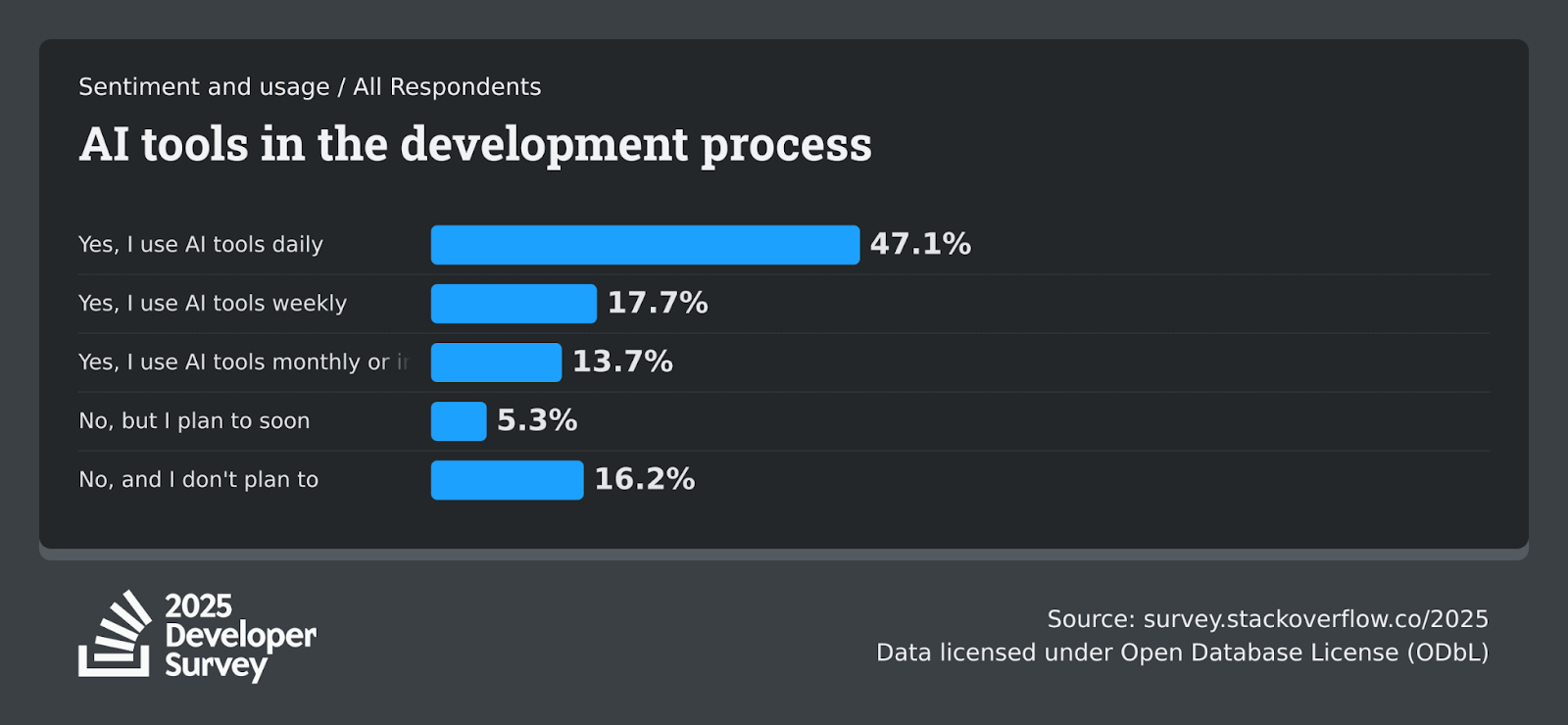

The rise of AI-generated code is changing how software is built, not only for individual developers but also for entire enterprises. What began as an optional productivity tool is now becoming a standard practice. The 2025 Stack Overflow Developer Survey found that 47.1% of developers use AI tools daily, with another 17.7% relying on them weekly.

This level of adoption underscores that AI-generated code has shifted from experiment to everyday necessity.

Daily adoption of AI tools by developers (Source: Stack Overflow 2025 Developer Survey).

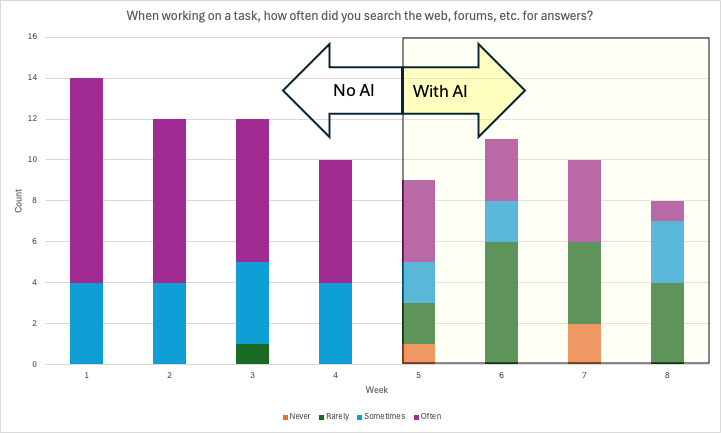

The biggest driver of adoption is productivity. Developers no longer need to spend hours writing boilerplate code or searching for syntax corrections. Instead, AI assistants generate functions, suggest fixes, and automate routine work. An eight-week productivity experiment illustrates this impact: once AI was introduced into workflows, the frequency of external searches on forums dropped by nearly 50%, as assistants provided immediate solutions. This reduction in friction translates directly into faster task completion and fewer interruptions during development.

Forum search frequency decreases once developers adopt AI assistants (Source: internal productivity study).

For enterprises, these efficiency gains translate into business value. Faster development means shorter release cycles, enabling organizations to deliver new features and updates more rapidly. In industries like fintech or SaaS, where time-to-market is a competitive differentiator, the ability to accelerate rollouts can shape market position. At the same time, AI-generated code frees senior engineers from repetitive coding, allowing them to focus on higher-order tasks such as system architecture, integration, and innovation. This shift in how talent is utilized strengthens an organization’s ability to scale.

Smaller teams also benefit. By multiplying developer output without proportional headcount increases, AI tools act as a force multiplier, enabling startups to compete with larger firms in terms of delivery speed. Larger enterprises, on the other hand, use AI-generated code to scale capacity without escalating labor costs.

Critically, speed isn’t a substitute for rigor. As outlined in the related deep-dive “Why Most AI-Generated Code Fails Without Professional Testing,” faster output still requires systematic verification to avoid shipping hidden defects or compliance gaps.

In short, the acceleration enabled by AI-generated code is real, measurable, and strategically important. Yet, these gains come with a trade-off: the same tools that save time can also introduce vulnerabilities and compliance blind spots. Understanding these risks is the next critical step.

Security and Compliance Risks in AI-Generated Code

The efficiency of AI-generated code often conceals a growing set of risks. These risks extend beyond technical flaws into regulatory, financial, and governance dimensions, making this the most pressing challenge enterprises face when adopting AI-driven development.

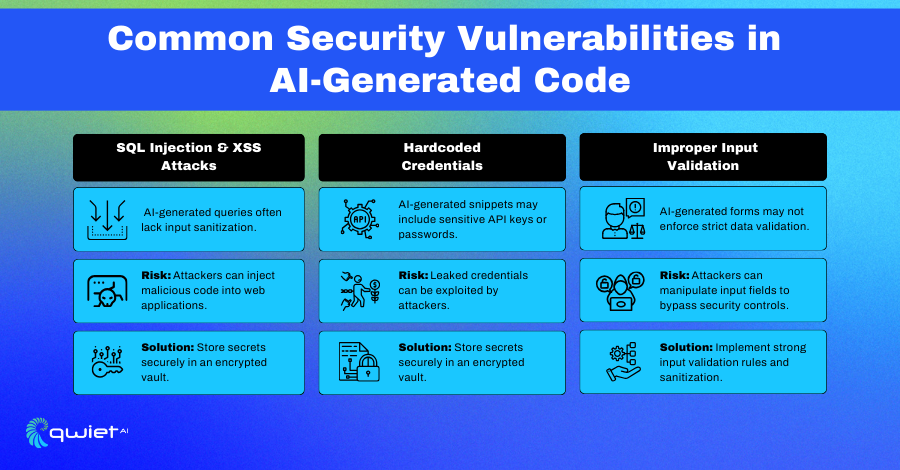

The most critical security concerns include:

Hardcoded credentials: AI often generates code embedding API keys or passwords directly into files, creating an immediate attack surface.

Improper input validation: Inadequate sanitization leaves systems exposed to SQL injection and XSS, two of the most common exploits in web applications.

Common security vulnerabilities in AI-generated code, including SQL injection, hardcoded credentials, and improper input validation (Source: Quiet AI).
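The two concerns above can be made concrete with a minimal Python sketch. It is illustrative only: the `users` table, the `PAYMENTS_API_KEY` variable name, and the helper functions are hypothetical and not taken from any specific codebase. It shows a secret read from the environment rather than embedded in source, and a parameterized query that treats user input strictly as data.

```python
import os
import sqlite3


def load_api_key(env_var: str = "PAYMENTS_API_KEY") -> str:
    """Read a secret from the environment instead of hardcoding it in source.

    The variable name is a placeholder chosen for this example.
    """
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Missing required secret: {env_var}")
    return key


def find_user(conn: sqlite3.Connection, username: str) -> list:
    """Look up a user with a parameterized query.

    The `?` placeholder makes the driver bind `username` as a value,
    so input such as "' OR '1'='1" cannot alter the SQL statement.
    """
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cur.fetchall()


def make_demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database for the demonstration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    conn.commit()
    return conn
```

With the demo database, `find_user(conn, "alice")` returns the matching row, while a classic injection string such as `"' OR '1'='1"` matches nothing because it is compared as a literal username.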

Other vulnerabilities emerge in more subtle ways:

Hidden patterns from training data can reintroduce outdated logic or unsafe libraries.

Logic flaws may pass basic testing but create exploitable weaknesses under real-world loads.

Traceability gaps make it unclear who authored a given piece of code, human or AI, complicating accountability and audits.

Compliance blind spots magnify the risk. AI-generated code does not “understand” laws such as GDPR, HIPAA, or the EU AI Act. As a result, applications may inadvertently mishandle sensitive data or rely on non-compliant practices. For industries like finance or healthcare, this is not just a technical risk but a legal and reputational one.

The financial stakes are high. A figure widely attributed to IBM research holds that post-release vulnerabilities cost up to 30 times more to fix than those caught in development. With AI accelerating output, flaws can move into production faster than ever, amplifying costs and multiplying exposure.

Taken together, these issues show that AI-generated code cannot be treated as “faster traditional coding.” It introduces a new class of vulnerabilities and compliance challenges that require proactive management. Without clear guardrails, enterprises risk trading efficiency for instability, which is why the next section focuses on the safeguards that make AI-generated code enterprise-ready.

Making AI-Generated Code Secure and Enterprise-Ready

Enterprises adopting AI-generated code cannot rely on speed alone. The same features that accelerate delivery (instant boilerplate generation, automated bug fixes, rapid prototyping) also increase the chance that vulnerabilities and compliance failures slip through undetected. Without stronger guardrails, organizations risk trading short-term efficiency for long-term instability.

1. Shift-left testing must be non-negotiable

Security has to move upstream, starting at the earliest development stages. Automated scans and static code analysis catch injection flaws, credential leaks, and dependency issues before deployment. But automation alone isn’t enough. Many flaws in AI-generated code are subtle, such as unsafe error handling or misconfigured authentication flows, and only human review can spot them. Embedding both automated and manual testing directly into CI/CD pipelines turns security from a bottleneck into a proactive safeguard.
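As a rough illustration of what an automated scan in an early pipeline stage might look like, the following Python sketch flags likely credential leaks line by line. The two rules and the function names are assumptions made for this example; dedicated scanners ship far larger, tuned rule sets and should be preferred in practice.

```python
import re

# Illustrative rules only (assumed for this sketch, not an exhaustive set).
RULES = {
    # AWS access key IDs follow a well-known "AKIA" + 16 characters shape.
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A quoted literal assigned to a secret-looking name.
    "hardcoded-password": re.compile(
        r"""\b(password|passwd|secret|api_key)\s*=\s*["'][^"']+["']""",
        re.IGNORECASE,
    ),
}


def scan_source(text: str) -> list:
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def ci_exit_code(text: str) -> int:
    """Return 1 when anything is flagged so a pipeline stage can fail early."""
    return 1 if scan_source(text) else 0
```

A check like this is cheap enough to run on every commit, which is the point of shifting left: the finding surfaces while the change is still small. It does not replace the human review described above.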

2. Compliance validation needs to be systematic, not ad hoc

AI systems are not aware of GDPR, HIPAA, or the EU AI Act. That leaves enterprises exposed if compliance is only checked at the end. Continuous validation should verify whether generated code respects data residency rules, applies correct encryption standards, and avoids libraries that fall short of regulatory requirements. Without this, even high-performing applications can fail audits and trigger penalties.
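One way continuous validation of encryption standards could be approximated is a policy check that parses generated code and flags disallowed algorithms. The sketch below is a simplified assumption, not a regulatory test: the `WEAK_HASHES` policy is hypothetical, and the check only recognizes direct calls of the form `hashlib.md5(...)`.

```python
import ast

# Assumed policy for illustration: these hashlib constructors are disallowed.
WEAK_HASHES = {"md5", "sha1"}


def find_weak_hash_calls(source: str) -> list:
    """Return sorted (line_number, algorithm) pairs for calls like hashlib.md5(...)."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if (
            isinstance(func, ast.Attribute)
            and isinstance(func.value, ast.Name)
            and func.value.id == "hashlib"
            and func.attr in WEAK_HASHES
        ):
            findings.append((node.lineno, func.attr))
    return sorted(findings)
```

Parsing the syntax tree rather than matching text avoids false hits in comments and strings. The sketch will still miss aliased imports (`from hashlib import md5`) and `hashlib.new("md5")`, so it illustrates the idea rather than serving as a complete control.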

3. Risk management requires more than patching issues

Enterprises should treat AI-generated code as a new asset class that demands governance. Three priorities stand out:

Governance: Clear policies defining when AI-generated code requires additional review, how it is logged, and who approves it.

Monitoring and auditability: Tracking code provenance to ensure accountability and to support post-incident investigation.

Independent assurance: Professional QA and security testing services that provide objective validation of both code security and compliance.
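The governance and auditability priorities above could be sketched as a small provenance record attached to each change. Everything here is hypothetical: the field names, the `may_merge` policy, and the JSON audit line are assumptions for illustration, and a real system would store such records wherever the organization already keeps its audit trail.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class ProvenanceRecord:
    path: str
    sha256: str                 # fingerprint of the exact content reviewed
    origin: str                 # "human" or "ai"
    tool: Optional[str]         # assistant name when origin == "ai"
    approved_by: Optional[str]  # named human approver, if any


def make_record(path: str, content: str, origin: str,
                tool: Optional[str] = None,
                approved_by: Optional[str] = None) -> ProvenanceRecord:
    """Create a record that ties a code change to its origin and approver."""
    if origin not in {"human", "ai"}:
        raise ValueError(f"Unknown origin: {origin}")
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    return ProvenanceRecord(path, digest, origin, tool, approved_by)


def may_merge(record: ProvenanceRecord) -> bool:
    """Assumed policy: AI-originated code needs a named human approver."""
    return record.origin == "human" or record.approved_by is not None


def to_audit_line(record: ProvenanceRecord) -> str:
    """Serialize the record as one JSON line suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Hashing the content means a later investigation can confirm that the code in production is the code that was approved, and the explicit `origin` field addresses the question of whether a human or an AI authored it.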

4. Why professional QA and security testing is decisive

Internal teams and automation can only go so far. Penetration tests reveal how a leaked API key could be exploited; compliance audits confirm whether workflows truly meet GDPR or HIPAA standards; code reviews uncover logic flaws that automated scans miss. This level of assurance requires structured methodologies and domain expertise.

Firms with both technical and regulatory depth, such as Twendee, deliver this assurance. By combining automated vulnerability detection with manual security testing and compliance validation, they enable enterprises to adopt AI-generated code with the same confidence they expect from traditional software: fast, secure, and compliant by design.

Conclusion

AI-generated code is a powerful accelerator, but speed alone is not enough. Without guardrails, it multiplies vulnerabilities, compliance failures, and long-term costs. The real value emerges when security testing, compliance validation, and governance are built into the process.

Enterprises that adopt this mindset move fast and stay protected. Strong QA and security testing turn AI-generated code from a risky shortcut into a reliable enterprise asset.

The conversation around AI-generated code is only beginning. As security standards, compliance rules, and development practices evolve, staying ahead will define which enterprises scale responsibly.

Follow Twendee and Facebook for insights, or visit twendeesoft.com to explore how our team tracks these changes and translates them into practical solutions.