As enterprises scale their AI adoption, one quiet threat keeps eroding budgets and efficiency: AI sprawl. The proliferation of disconnected models, redundant tools, and siloed experiments creates a maze that drains resources and confuses teams.

According to Forbes, AI sprawl has become one of the top financial blind spots in enterprise tech, with organizations running dozens of overlapping models that inflate maintenance and infrastructure costs. What was once innovation has turned into inefficiency.

The solution isn’t to slow down AI; it’s to govern it strategically.

1. Build Enterprise AI Governance That Unifies the Vision

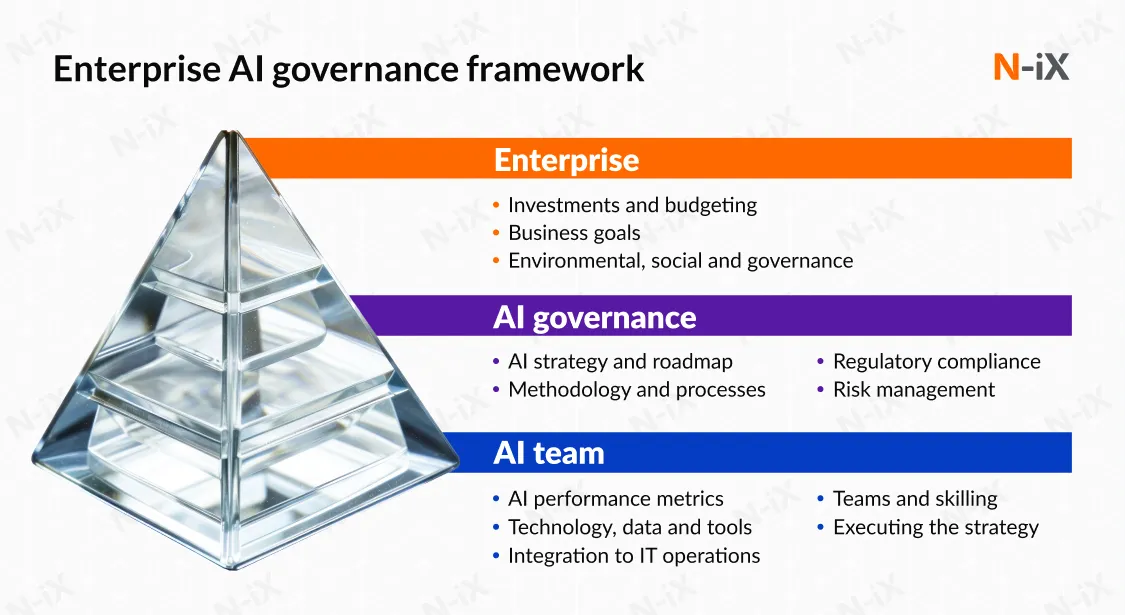

AI sprawl doesn’t begin with technology; it begins with a lack of unified governance. When every department builds or deploys models in isolation, the result is fragmented systems, inconsistent standards, and rising operational costs. What enterprises need is not more AI, but a structured AI governance framework that connects strategy, compliance, and execution.

An enterprise AI governance framework connects strategy, compliance, and execution, aligning technical AI operations with broader business goals. (Source: N-iX)

Effective governance establishes a single source of truth for all AI activities, defining which tools can be adopted, how models are validated, and who is accountable for performance and risk. This clarity ensures every AI initiative advances enterprise objectives rather than competing for isolated wins.

A mature governance system typically includes:

A cross-functional AI council: aligning IT, compliance, data, and business leaders to define principles, ROI metrics, and approval workflows.

Standardized development and documentation processes: enforcing uniform coding, testing, and evaluation standards to prevent inconsistency.

Automated policy enforcement: embedding compliance validation into ML pipelines to identify risks before deployment.

Continuous oversight mechanisms: integrating audit trails, access controls, and performance dashboards for model transparency.
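Automated policy enforcement can be as simple as a gate that runs before deployment. The sketch below is a minimal illustration, not a reference implementation: the `ModelCard` fields, the approved tool list, and the accuracy threshold are all hypothetical stand-ins for whatever an AI council actually defines.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical policy values that an AI council might define.
APPROVED_TOOLS = {"pytorch", "scikit-learn", "xgboost"}
MIN_VALIDATION_ACCURACY = 0.80


@dataclass
class ModelCard:
    """Hypothetical metadata submitted with each deployment request."""
    name: str
    owner: str = ""
    tools: List[str] = field(default_factory=list)
    validation_accuracy: Optional[float] = None
    audit_log_enabled: bool = False


def policy_violations(card: ModelCard) -> List[str]:
    """Return a list of violations; an empty list means the gate passes."""
    violations = []
    if not card.owner:
        violations.append("no accountable owner assigned")
    unapproved = sorted(set(card.tools) - APPROVED_TOOLS)
    if unapproved:
        violations.append("unapproved tools: " + ", ".join(unapproved))
    if card.validation_accuracy is None:
        violations.append("model has not been validated")
    elif card.validation_accuracy < MIN_VALIDATION_ACCURACY:
        violations.append("validation accuracy below threshold")
    if not card.audit_log_enabled:
        violations.append("audit logging is disabled")
    return violations


if __name__ == "__main__":
    card = ModelCard(
        name="churn-model",
        owner="data-team",
        tools=["xgboost", "custom-lib"],
        validation_accuracy=0.91,
        audit_log_enabled=True,
    )
    # Report every reason this deployment request would be blocked.
    for problem in policy_violations(card):
        print("BLOCKED:", problem)
```

A check like this could run as a step in an ML pipeline, failing the build whenever the returned list is not empty.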

When executed properly, governance becomes a strategic accelerator, not a bottleneck. It brings accountability to innovation, allowing enterprises to scale AI confidently while maintaining financial and regulatory discipline.

Twendee helps enterprises implement end-to-end AI governance frameworks that embed automation and traceability throughout the model lifecycle, reducing redundant costs, ensuring compliance, and building the foundation for long-term scalability.

2. Audit and Consolidate the AI Landscape

Most enterprises underestimate how much duplication already exists within their AI ecosystem. Over time, teams experiment independently, choosing their own platforms, data sources, and APIs until the organization ends up managing dozens of disconnected systems that quietly drain operational budgets.

According to ClickUp’s analysis, large companies now use 40 or more AI tools on average, but fewer than half are actively integrated or monitored. Each of these tools carries its own licensing fees, infrastructure needs, and maintenance cycles, creating a cumulative cost that grows silently with every new initiative.

A well-structured AI audit should aim to uncover:

Redundant models performing similar tasks in different departments.

Unmonitored pipelines that continue to consume compute power without measurable ROI.

Overlapping vendor contracts that inflate licensing and support costs.

Underutilized infrastructure running at scale even when model usage declines.
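A first pass at such an audit can be scripted against a model inventory. The sketch below assumes a hypothetical inventory format (the field names and sample rows are invented for illustration); in practice the rows might come from a model registry or a billing export.

```python
from collections import defaultdict

# Hypothetical inventory rows, invented for illustration.
INVENTORY = [
    {"model": "churn-v2", "department": "marketing", "task": "churn-prediction",
     "monthly_cost": 1200.0, "requests_30d": 54000},
    {"model": "churn-rf", "department": "sales", "task": "churn-prediction",
     "monthly_cost": 900.0, "requests_30d": 300},
    {"model": "ocr-legacy", "department": "finance", "task": "invoice-ocr",
     "monthly_cost": 700.0, "requests_30d": 0},
]


def redundant_tasks(rows):
    """Map each task to its models when more than one department serves it."""
    by_task = defaultdict(list)
    for row in rows:
        by_task[row["task"]].append(row)
    return {
        task: [r["model"] for r in group]
        for task, group in by_task.items()
        if len({r["department"] for r in group}) > 1
    }


def idle_models(rows, min_requests=1):
    """Return rows for models that served fewer than min_requests in 30 days."""
    return [r for r in rows if r["requests_30d"] < min_requests]


def idle_monthly_spend(rows, min_requests=1):
    """Sum the monthly cost of models that are effectively unused."""
    return sum(r["monthly_cost"] for r in idle_models(rows, min_requests))


if __name__ == "__main__":
    print("Redundant tasks:", redundant_tasks(INVENTORY))
    print("Idle models:", [r["model"] for r in idle_models(INVENTORY)])
    print("Idle monthly spend:", idle_monthly_spend(INVENTORY))
```

Grouping by task rather than by model name is a deliberate choice here: duplicated effort usually hides behind different names for the same job.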

Once identified, consolidation becomes the logical next step. By migrating workloads to fewer, more scalable AI platforms, organizations can centralize model management and streamline version control. Consolidation also improves visibility, making it easier to enforce governance policies, optimize performance, and identify models worth retraining versus retiring.

From a financial perspective, enterprises that adopt a consolidation-first strategy typically see cost reductions of 30–40%, not just through lower compute expenses, but also by cutting redundant human oversight. More importantly, consolidation transforms AI operations from an experimental collection of tools into a coherent, governed ecosystem that scales efficiently.

3. Implement Model Lifecycle Management to Prevent Waste

Even with strong governance and consolidation, AI inefficiency often reappears in model maintenance. Many organizations unknowingly allow outdated or underperforming models to remain in production long after they’ve lost relevance. These silent inefficiencies inflate compute costs, leave models exposed to data drift, and undermine trust in model outputs, quietly eroding the very ROI that AI promised to deliver.

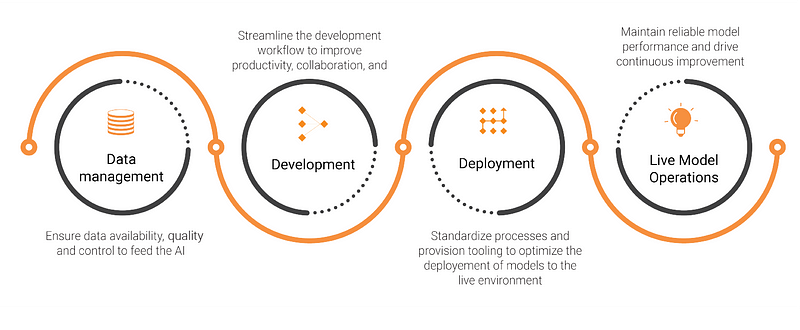

Model Lifecycle Management (MLM) provides a structured way to maintain visibility and control over every model from development through retirement. A mature lifecycle framework ensures models remain accurate, compliant, and cost-effective throughout their operation. It typically includes:

Automated version control to track changes and dependencies across the portfolio.

Performance validation pipelines that test models against live data and alert teams to drift or degradation.

Retirement and archiving workflows that decommission models once their value declines.

Centralized dashboards offering real-time visibility into model performance, governance status, and audit history.

This continuous feedback loop turns AI maintenance from a reactive exercise into a proactive system of optimization. Instead of firefighting performance issues, enterprises gain insight into which models drive impact and which quietly drain resources.
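The keep, retrain, or retire decision at the heart of that loop can be expressed as a small rule. This is a minimal sketch under stated assumptions: the function name, the accuracy-drop threshold, and the usage threshold are hypothetical, and a real pipeline would likely use richer drift metrics than a single accuracy comparison.

```python
def lifecycle_action(baseline_accuracy, live_accuracy, requests_30d,
                     max_accuracy_drop=0.05, min_requests=100):
    """Suggest a lifecycle action for one model.

    All thresholds are hypothetical defaults chosen for illustration.
    """
    # A model that is barely used is a retirement candidate regardless of accuracy.
    if requests_30d < min_requests:
        return "retire"
    # A large drop from the accuracy measured at launch signals degradation.
    if baseline_accuracy - live_accuracy > max_accuracy_drop:
        return "retrain"
    return "keep"


if __name__ == "__main__":
    # Hypothetical portfolio: (name, baseline accuracy, live accuracy, requests in 30 days).
    portfolio = [
        ("churn-v2", 0.90, 0.89, 54000),
        ("fraud-v1", 0.90, 0.80, 12000),
        ("ocr-legacy", 0.90, 0.89, 10),
    ]
    for name, baseline, live, requests in portfolio:
        print(name, "->", lifecycle_action(baseline, live, requests))
```

Checking usage before accuracy means an unused model is never queued for an expensive retraining run.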

But lifecycle discipline is also a matter of security and compliance. As AI pipelines become increasingly automated, weak oversight can lead to untraceable model behavior, data leakage, or regulatory violations. Enterprises are now recognizing that efficiency must be paired with governance. As discussed in AI-Generated Code Is Fast—But It Requires Stronger Security & Compliance Guardrails, scaling AI responsibly means integrating guardrails that enforce consistency, accountability, and ethical use at every stage of deployment.

AI model lifecycle stages from data management to live operations, showing continuous improvement and retraining (Source: ML6)

By embedding lifecycle management within a governed infrastructure, organizations eliminate redundancy, maintain compliance, and build an AI environment where every model is traceable, measurable, and strategically aligned with business outcomes.

4. Standardize Data Infrastructure to Cut Hidden Costs

AI performance depends on the quality and accessibility of the data it consumes, yet for many enterprises, data remains the most fragmented and expensive layer of their AI ecosystem. Multiple teams maintain separate data lakes, use inconsistent labeling methods, and duplicate storage across clouds, creating silos that quietly inflate operational spending.

According to The New Stack, up to 60% of enterprise AI costs stem from data-related inefficiencies: fragmented architecture, redundant pipelines, and poor data governance. Without a unified infrastructure, every new model effectively starts from scratch, forcing teams to repeat integration, cleansing, and validation work.

The solution lies in standardizing data architecture to create an interoperable foundation where models can scale and share context seamlessly. This involves:

Unified metadata and labeling schemas so models across departments can interpret data consistently.

Centralized data repositories (whether a lake, warehouse, or hybrid) that act as the single source of truth.

Interoperable APIs and connectors to ensure smooth data flow between AI, analytics, and operational systems.

Automated governance rules embedded into pipelines to enforce compliance and maintain audit readiness.
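A unified metadata and labeling schema only helps if pipelines actually check records against it. The sketch below shows one way such a check might look; the required fields and the allowed label set are hypothetical examples, not a recommended schema.

```python
# Hypothetical shared schema: field name -> expected Python type.
REQUIRED_FIELDS = {
    "dataset_id": str,
    "owner": str,
    "label": str,
    "contains_pii": bool,
}

# Hypothetical label vocabulary agreed across departments.
ALLOWED_LABELS = {"positive", "negative", "neutral"}


def schema_errors(record):
    """Return a list of schema problems; an empty list means the record conforms."""
    errors = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            errors.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            errors.append(f"wrong type for {name}: expected {expected_type.__name__}")
    # Only check the vocabulary when the label is present and is a string.
    label = record.get("label")
    if isinstance(label, str) and label not in ALLOWED_LABELS:
        errors.append(f"unknown label: {label}")
    return errors


if __name__ == "__main__":
    record = {"dataset_id": "d1", "owner": "team-a", "label": "pos", "contains_pii": "no"}
    for problem in schema_errors(record):
        print("REJECTED:", problem)
```

Running a check like this at ingestion time would let every team reject nonconforming records before they reach a shared repository.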

Standardization doesn’t just cut storage and processing costs; it also strengthens traceability, security, and explainability. When systems share a common data language, enterprises can retrain models faster, deploy updates confidently, and maintain compliance with less manual oversight.

Twendee helps organizations achieve this by designing unified data architectures and automated governance frameworks that connect fragmented pipelines into a cohesive ecosystem. The result is greater visibility, lower maintenance costs, and a foundation built for scalable AI growth.

5. Focus on Strategic Implementation and Scalability

Avoiding AI sprawl isn’t just a technical task; it’s a strategic mindset. The most successful enterprises approach AI with a clear framework for scalability rather than a race to deploy more models. Each new system must serve a measurable business purpose and integrate seamlessly with the existing ecosystem.

Strategic implementation begins by asking critical questions early:

What specific process or metric will this model improve?

How will it integrate with existing data and workflows?

What level of ROI justifies ongoing compute and maintenance costs?

AI initiatives built around these principles deliver consistent value because they expand intelligently, not exponentially. Scalability is about reusability, interoperability, and governance, ensuring that every new deployment strengthens the overall ecosystem rather than fragmenting it.

Automation also plays a defining role. By embedding monitoring, compliance, and retraining workflows into the deployment process, organizations can scale AI confidently without multiplying oversight. When governance and scalability evolve together, enterprises move from managing complexity to mastering control, a transition that turns AI from a cost center into a competitive advantage.

Conclusion

AI sprawl isn’t a technology problem; it’s a discipline problem. When organizations build faster than they govern, innovation becomes noise. Real efficiency comes from integration: one vision, one framework, one accountable system of intelligence.

Enterprises that bring AI under strategic governance don’t just reduce costs; they gain the clarity to innovate with purpose, measure impact precisely, and scale with confidence.

Discover how Twendee helps leading teams build scalable AI foundations.