Modern AI scaled in the cloud, where elastic compute accelerated model development and experimentation. But as AI now powers regulated workflows, from financial screening to clinical imaging and industrial control, enterprises are recalibrating.

The shift in 2025 is not a retreat from cloud, but a move toward hybrid intelligence: sensitive inference and proprietary knowledge kept inside the perimeter, while scalable training continues externally. AI maturity now means placing compute where security, compliance, and operational criticality demand it: increasingly, within enterprise-controlled infrastructure.

1. Financial Fraud & AML Intelligence

Financial institutions are returning fraud and compliance AI to on-prem environments because AI now sits inside core risk and regulatory engines rather than analytics dashboards. In 2025, fraud prevention extends beyond transaction anomaly scoring into synthetic identity detection, behavioral network mapping, sanctions screening, document authentication, and continuous KYC monitoring, all domains where data exposure, inference failure, or governance gaps carry regulatory and reputational consequences. As payments accelerate and regulatory frameworks tighten, banks require full control over data flow, inference logs, and model behavior.

Why this trends on-prem:

Real-time payment rails reduce latency tolerance for fraud decisions

Cross-border cloud paths trigger PSD2, PCI-DSS, GDPR, and AI Act obligations

Fraud models rely on direct access to identity graphs, ledgers, and audit logs

Regulators expect traceable, explainable, and locally governed inference

Banks protect fraud models as defensive IP, avoiding shared cloud risks

Strategic shift: Fraud and AML models are becoming sovereign trust infrastructure in finance. On-prem deployment ensures deterministic performance, private model custody, immutable audit control, and data residency alignment, making it a compliance-driven architectural decision, not a nostalgic one.

On-prem AI strengthens financial risk systems by keeping sensitive identity and transaction intelligence inside governed infrastructure while supporting real-time decisions. (Source: Invensis Learning)

2. Clinical AI at Point of Care

Healthcare systems are deploying diagnostic and triage AI inside hospital networks as medical models shift from research tools to direct clinical actors. Radiology suites, emergency departments, and pathology labs increasingly rely on AI to prioritize scans, detect anomalies, and support clinical evaluation in real time. In this context, trust, patient safety, and regulatory certainty define the deployment path, not the convenience of elastic compute.

Cloud-based inference introduces unacceptable ambiguity around PHI exposure, auditability, latency risk, and legal accountability. With the EU AI Act treating most medical-device AI as high-risk, and health-privacy enforcement under regimes such as HIPAA tightening, hospitals need local logging, explainable inference, and uninterrupted availability. Clinical systems also require predictable response windows: MRI flagging, pulmonary embolism detection, or trauma CT triage cannot rely on variable cloud routes.

AI vendors are reflecting this shift by shipping edge inference appliances, FDA-cleared radiology models, and privacy-preserving imaging accelerators optimized for PACS, RIS, and EMR environments. Clinical AI is now designed to live beside imaging hardware and care workflows, not on remote GPU clusters.

Why this accelerates on-prem adoption:

Real-time imaging and ER triage require deterministic latency

Patient data sovereignty mandates local inference and audit trails

Regulation enforces traceability, transparency, and clinician oversight

Hospitals prioritize availability, cybersecurity isolation, and local failover

Clinical models now ship with on-prem deployment as default

Strategic shift: Medical AI is becoming in-hospital intelligence, not cloud-hosted automation. Reliability, privacy, and liability anchor it at the point of care, where decisions directly shape patient outcomes and legal exposure.

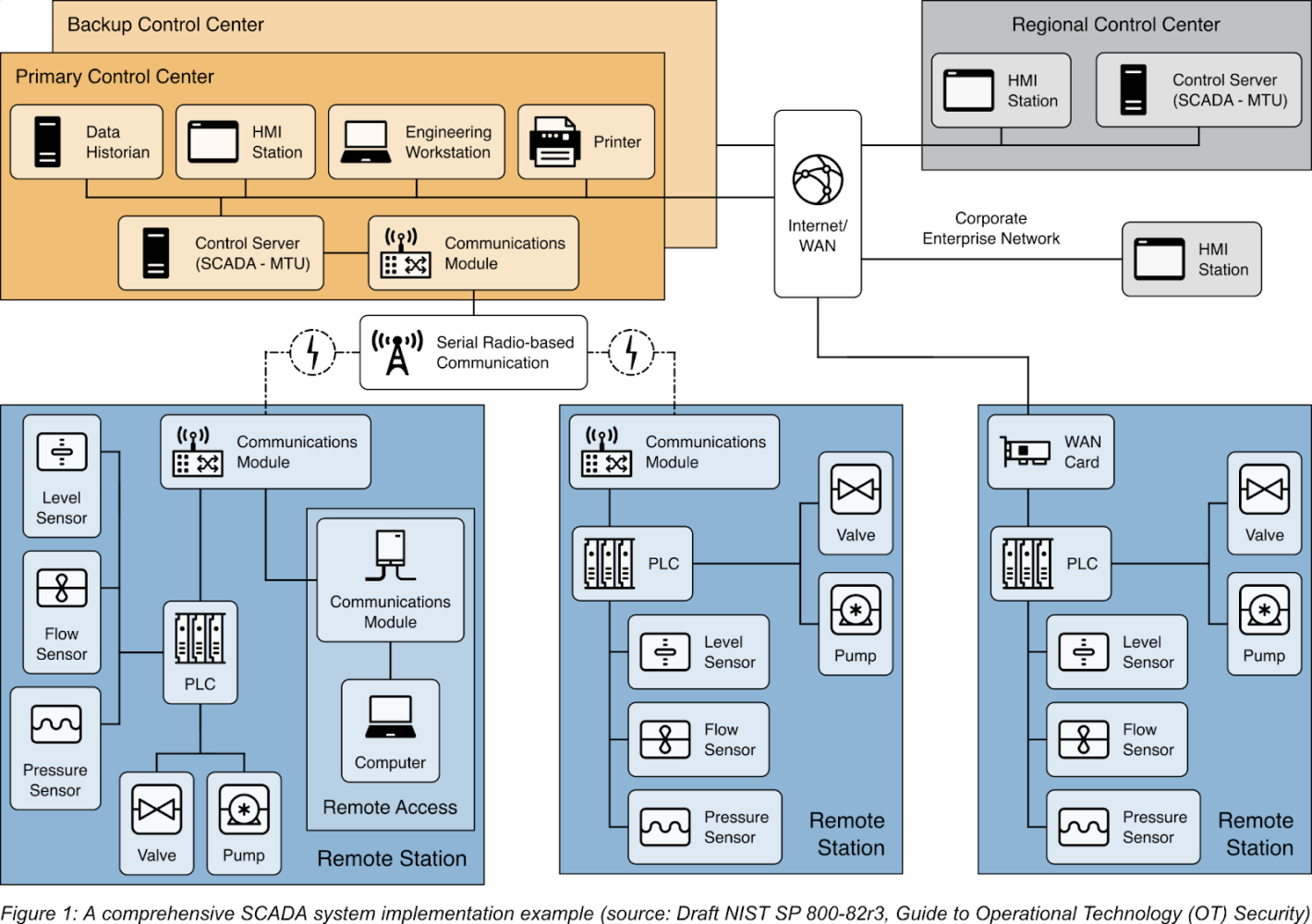

3. Industrial AI at Operational Edge

Industrial leaders are placing AI inside plants, grids, and factory lines as models move from monitoring to real-time control in robotics, turbine operations, energy systems, and logistics flows. When a misprediction can stop a conveyor, damage a compressor, or trigger a safety shutdown, AI must run beside the machines it governs, not in a distant datacenter. OT telemetry (vibration signatures, actuator commands, process logic) is some of the most sensitive industrial IP. Modern industrial stacks now pair rugged gateways and on-prem accelerators to execute inference inside secured operational networks.

Why this accelerates on-prem adoption:

Real-time loops require deterministic, low-latency inference

OT data and PLC logic are strategic industrial IP

Critical systems demand isolation, traceability, and compliance

Infrastructure resilience rules and standards (NIST ICS guidance, IEC 62443, EU NIS2) add compliance pressure

Edge hardware now supports full AI pipelines onsite

Strategic shift: Industrial AI is becoming machine-level intelligence, anchored in physical infrastructure where uptime, safety, and proprietary process logic shape deployment inside secured OT environments. Enterprise engineering teams increasingly partner with firms like Twendee to architect hybrid OT–AI systems that preserve operational resilience while satisfying internal security controls and regulated-industry compliance requirements.

OT architecture with SCADA, PLCs, and edge stations, illustrating on-premise intelligence and secure, real-time industrial decision environments. (Source: SEQRED)

4. Healthcare AI Under Clinical Governance

Healthcare providers are shifting core AI systems on-premise as models increasingly influence diagnostic triage, radiology workflows, oncology planning, and ICU alerts. When AI informs clinical judgment, the environment becomes regulated medical infrastructure, not analytics. PHI, genomic data, and imaging archives form identity-rich datasets, and oversight bodies now expect provable isolation, auditability, and deterministic model behavior. Hospitals also require sub-second inference for stroke detection, cardiac monitoring, and surgical robotics, latency budgets that cloud routing cannot reliably meet.

Why this accelerates on-prem adoption:

PHI, imaging, and genomics mandate regulated data residency

Clinical outputs trigger medical liability & FDA audit trails

Real-time triage needs sub-second inference

Providers must prove explainability & model-version control

Shared cloud pipelines risk commingling PHI

Strategic shift: Healthcare AI is becoming regulated clinical instrumentation, pushing inference to hospital-controlled compute where governance, patient safety, and real-world accountability converge.

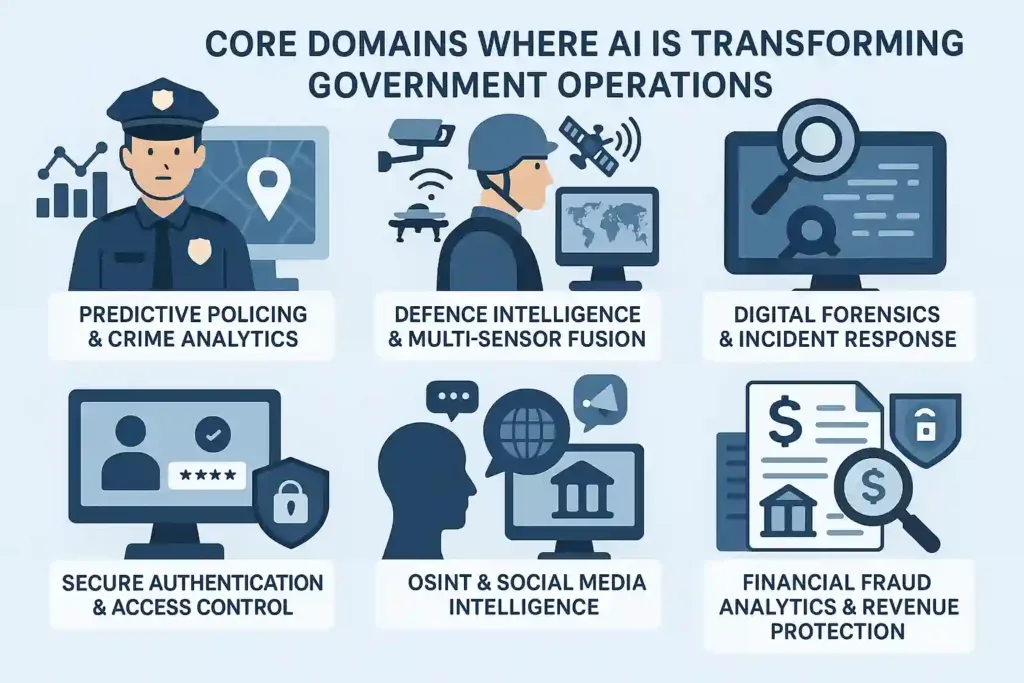

5. Public-Sector AI for National Security and Governance

Governments are moving mission-critical AI workloads on-premise as public institutions automate intelligence analysis, investigations, identity systems, and critical-infrastructure monitoring. In these environments, AI outputs are not just advisory; they influence public safety, legal decisions, cross-border controls, and military readiness. That level of consequence demands sovereign compute, strict auditability, and zero reliance on external cloud environments.

From predictive policing and counter-terror intelligence to digital identity verification and fiscal fraud detection, agencies increasingly require real-time inference within secured government networks. Data localization laws, national AI strategies, and cyber-defense doctrines (EU AI Act, NIST AI RMF, India DPI, UAE Sovereign Cloud) reinforce a shift toward government-controlled compute, protected communications, and air-gapped inference pipelines.

Why this accelerates on-prem adoption:

National-security data cannot transit external clouds

Audit trails and explainability required for legal accountability

Identity systems & biometric data are governed by sovereign-data rules

Multi-agency intelligence fusion demands secure shared environments

Political risk in vendor lock-in and foreign-cloud dependency

Strategic shift: Public-sector AI is evolving into sovereign digital infrastructure. For governments, on-prem AI is not a deployment choice; it is a state-capacity mandate where trust, control, and national resilience outweigh convenience.

Government AI applications across policing, identity systems, forensics, and national security. (Source: Innefu Labs)

6. Enterprise Knowledge Intelligence for Protected IP & Sensitive R&D

Enterprises are increasingly deploying knowledge-graph models, vector search, and RAG systems on-prem to safeguard proprietary research, engineering data, and long-cycle innovation assets. As generative AI embeds into regulated product development and technical decision-making, firms cannot risk prompt leakage, inadvertent model training on proprietary datasets, or exposure of confidential documentation to shared-cloud inference environments. That concern is reinforced by real-world incidents of model leakage and shadow training pipelines, highlighted in Twendee’s analysis on secure AI engineering.

Industries with deep R&D pipelines (pharmaceuticals, semiconductors, automotive, aerospace, and energy) are prioritizing sovereign knowledge AI that runs within their own trust boundary. These systems index lab data, simulation outputs, engineering logs, IP filings, and regulatory documents, supporting deterministic retrieval and keeping that material out of public LLM ecosystems.

Why this accelerates on-prem adoption:

IP-dense R&D data must not enter external model training corpora

Retrieval systems require deterministic traceability across internal sources

Enterprise compliance demands controlled access and federated permissions

Shared cloud models risk knowledge spillover and competitive exposure

Strategic shift: Knowledge AI is moving from “assistive search” to secure enterprise intelligence infrastructure. Forward-thinking organizations partner with engineering-first firms such as Twendee to architect hybrid AI stacks enabling high-performance retrieval and reasoning while preserving IP control and maintaining audit-grade internal governance.
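To make the ideas of controlled access and traceable retrieval concrete, here is a minimal sketch in Python. It is not taken from any particular product: the `Doc` fields, the group names, and the toy two-dimensional embeddings are all illustrative assumptions. The point it shows is that the permission filter runs before ranking, and that every result carries its source so an answer can be traced back to an internal system.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    """Hypothetical indexed document; field names are assumptions for illustration."""
    doc_id: str
    source: str                 # originating internal system, kept for traceability
    allowed_groups: frozenset   # groups permitted to retrieve this document
    embedding: tuple            # toy vector; a real system would use a model's output


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_embedding, docs, user_groups, top_k=3):
    """Return (doc_id, source) pairs the user may see, most similar first."""
    # Apply access control BEFORE ranking so restricted content never
    # reaches the ranking step or the downstream prompt.
    visible = [d for d in docs if d.allowed_groups & set(user_groups)]
    # Tie-break on doc_id so identical queries always return the same order.
    ranked = sorted(
        visible,
        key=lambda d: (-cosine(query_embedding, d.embedding), d.doc_id),
    )
    return [(d.doc_id, d.source) for d in ranked[:top_k]]
```

Sorting with a fixed tie-break is one simple way to keep retrieval repeatable for audits; a production system would also log the query, the caller, and the returned document IDs.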

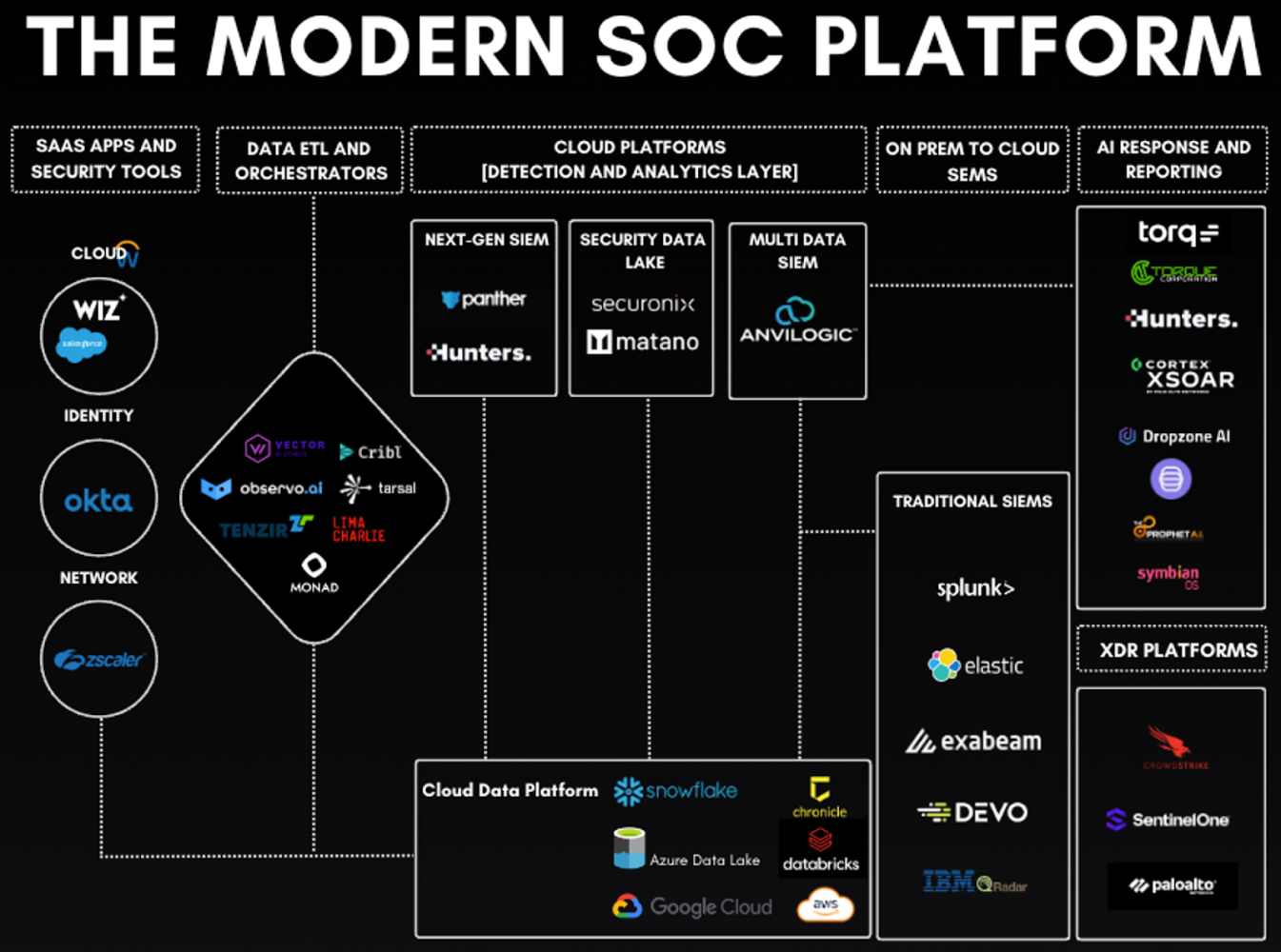

7. Cyber and Identity Defense AI Inside Enterprise Perimeters

Security operations teams are bringing AI models on-prem to protect identity systems, authentication flows, threat telemetry, and privileged access. Modern attacks (lateral movement, compromised credentials, API abuse, insider signals) evolve in real time. Detecting these patterns cannot depend on shared-tenant inference or unpredictable cloud latency. As identity becomes the primary attack surface, enterprises are embedding AI directly inside zero-trust perimeters, where session risk scoring, anomaly detection, and privilege monitoring require full control over model logic and audit trails.

Why this accelerates on-prem adoption:

Identity telemetry and user graph data are now crown-jewel assets

Real-time access decisions require local inference and sub-second latency

Internal user data cannot enter shared-model environments

MFA, SSO, API tokens, and session logs demand verifiable audit and governance

SOCs need model explainability for regulatory investigation and forensics

Strategic shift: Cybersecurity AI is becoming identity-centric infrastructure embedded at the trust boundary. Enterprises keep threat-detection and access-intelligence models on-prem to ensure sovereign control over authentication systems, auditability, and internal trust assurance.

Zero-trust SOC architecture showing identity platforms, hybrid SIEM layers, and on-prem security analytics pipelines. (Source: Software Analyst Cyber Research)

8. Regulatory-Driven AI Governance and Compliance Enforcement

As AI becomes embedded in regulated workflows, enterprises are deploying governance, monitoring, and model-audit systems on-prem to ensure verifiable compliance. New regulatory regimes (EU AI Act, FDA AI/ML device guidance, PCI DSS 4.0, SEC cybersecurity rules, MAS AI governance, and NIST AI RMF) demand auditable logs, explainable inference, controlled model updates, and provable data lineage.

Unlike cloud-hosted analytics tools, compliance AI must integrate directly with internal systems: access logs, case files, audit trails, and sensitive operational data. On-prem deployments provide guarantees of evidence integrity, deterministic policy enforcement, and protected model telemetry, ensuring no compliance artifact leaves enterprise boundaries.

Why this accelerates on-prem adoption:

Audit systems must maintain tamper-proof local logs

Regulators require provenance, explainability, and version controls

Policy enforcement engines need direct system access

Security and privacy controls cannot rely on shared cloud trust layers

Rising fines make internal control and provability essential

Strategic shift: AI compliance has evolved from policy paperwork to runtime governance infrastructure. Enterprises deploy governance AI inside protected environments to secure audit chains, prove fairness and safety, and enforce internal accountability at machine speed.
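One common way to approach the tamper-resistant local logs mentioned above is a hash chain, where each record commits to the one before it. The Python sketch below is illustrative only: the function names and record fields are assumptions, and strictly speaking the technique makes tampering evident rather than impossible. A real deployment would add signing, secure storage, and external anchoring of the latest hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash, payload):
    """SHA-256 over the previous hash plus a canonical JSON form of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_record(log, payload):
    """Append a record whose hash commits to the entire history before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _digest(prev_hash, payload),
    }
    log.append(entry)
    return entry


def verify_chain(log):
    """Recompute every hash; any edited, removed, or reordered record fails."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

In use, each inference decision (model version, input reference, outcome) would be passed to `append_record`, and an auditor could run `verify_chain` over the stored log to confirm that no earlier entry was altered after the fact.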

Conclusion

The return of on-premise AI is not nostalgia; it is a recognition that some workloads demand absolute control. Cloud still accelerates experimentation and scale, but regulated inference, sensitive data, and mission-critical decisions now sit inside enterprise boundaries.

Leaders are shifting from “cloud-first” to right-place compute: cloud for model growth, edge for real-time action, and on-prem for trust, auditability, and proprietary knowledge. This is not retreat; it is architectural maturity.
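The right-place compute idea can be expressed as a simple placement policy. The Python sketch below is a hypothetical illustration, not a prescribed rule set: the `Workload` fields, the rule ordering, and the 50 ms threshold are all assumptions chosen to mirror the three tiers described here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Placement(Enum):
    CLOUD = "cloud"
    EDGE = "edge"
    ON_PREM = "on_prem"


@dataclass(frozen=True)
class Workload:
    """Hypothetical workload descriptor; fields are illustrative assumptions."""
    name: str
    handles_regulated_data: bool
    holds_proprietary_ip: bool
    latency_budget_ms: Optional[float] = None  # None means no hard real-time need


def place(workload, realtime_threshold_ms=50.0):
    """Pick a tier: trust constraints first, then latency, else cloud."""
    # Regulated data and proprietary knowledge stay inside the enterprise boundary.
    if workload.handles_regulated_data or workload.holds_proprietary_ip:
        return Placement.ON_PREM
    # Tight latency budgets (assumed threshold) run next to the equipment.
    if (workload.latency_budget_ms is not None
            and workload.latency_budget_ms <= realtime_threshold_ms):
        return Placement.EDGE
    # Everything else, such as large-scale training, can use elastic cloud capacity.
    return Placement.CLOUD
```

Checking trust constraints before latency reflects the article's emphasis on governance; an organization with different priorities could reorder the rules or add tiers.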

As AI becomes infrastructure, organizations that balance performance with governance will set the benchmark for secure, compliant, and high-assurance intelligence.
