Banking applications operate under pressure that most consumer apps never face. Transaction volume can surge sharply during salary periods, repayment cycles, or large promotional campaigns. Latency problems rarely originate from one slow API. They build up across synchronous workflows, multi-step security checks, and older backend components that cannot scale quickly enough. Even a few hundred milliseconds of slowdown can escalate into failed transactions, customer dissatisfaction, and reputational risk. Improving banking app performance requires engineering depth that spans architecture, APIs, and security operations.

Banking App Performance Challenges During Peak Transactions

High-traffic banking systems slow down because of structural design patterns, not isolated problems.

Financial transaction flows often pass through multiple stages: identity verification, risk scoring, account validation, ledger updates, and user notifications. When these steps run sequentially, latency compounds quickly during peak periods when synchronous APIs handle thousands of concurrent requests.

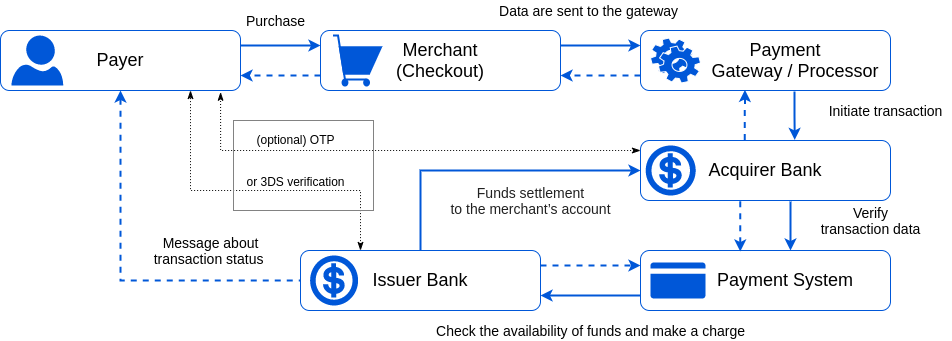

Typical multi-step payment transaction workflow illustrating issuer, acquirer, and gateway interactions. (Source: Corefy)

This multi-node path shows how a single transaction touches multiple systems, each adding delay. The complexity grows when additional layers activate, such as OTP, fraud scoring, AML checks, or 3DS verification. Systems designed around blocking operations struggle the most during salary days, seasonal shopping spikes, or campaign-driven bursts.

Further latency comes from:

Inefficient API design such as heavy payloads and unnecessary round trips

Database bottlenecks caused by repeated queries under load

Security flows that trigger sequential encryption and rule checks

Legacy cores that cannot handle modern transaction volume

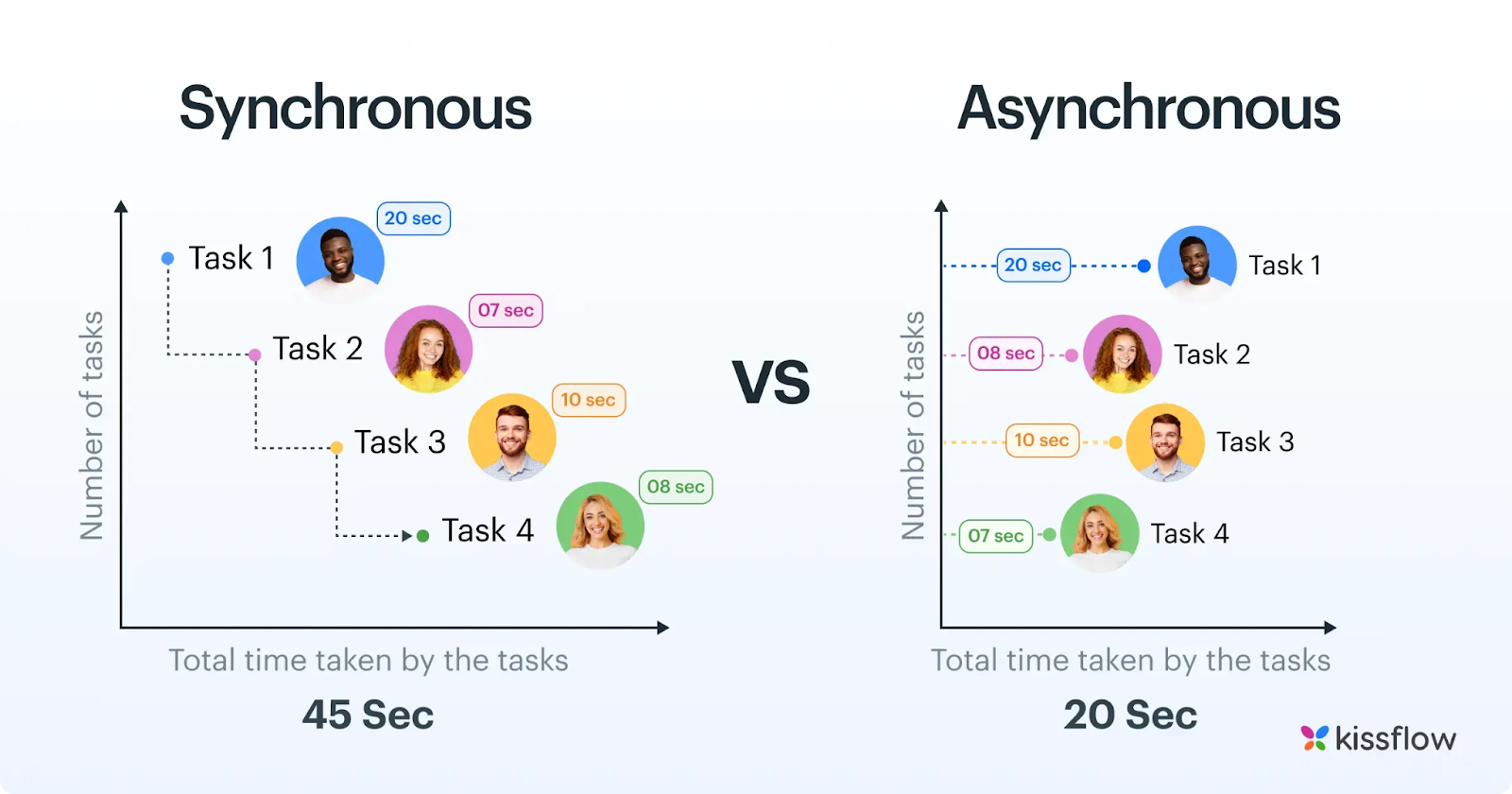

To understand how workflow design affects speed, it helps to compare synchronous and asynchronous processing. Sequential flows force each task to wait for the previous one, turning small delays into system-wide slowdowns. Asynchronous design allows multiple operations to progress independently, reducing the total time significantly.

Synchronous versus asynchronous workflow showing faster parallel processing. (Source: Kissflow)

This difference becomes critical in financial systems where even a few hundred milliseconds matter. Teams with banking experience often redesign these flows into asynchronous or event-driven structures to prevent cascading delays and stabilize performance during peak usage.

Latency is also visible at the user interface layer. Perceived speed depends on UI responsiveness and rendering behavior, which connects with principles discussed in Twendee’s internal guide on improving Core Web Vitals and UX.

Engineering Strategies That Improve Banking App Performance Under Heavy Load

Improving performance in banking systems requires optimizing multiple layers at once. Below are the strategies that consistently deliver meaningful latency reduction during peak transaction periods.

1. Low-latency architecture built for sudden transaction spikes

Banking traffic rarely grows at a steady rate. It arrives in sharp bursts during salary periods, repayment deadlines, or large promotional campaigns, and systems built on synchronous request–response flows struggle because each service must wait for downstream confirmation before responding. This design amplifies latency under pressure. A more resilient approach is event-driven processing, where the application acknowledges the user action instantly while deeper tasks (ledger updates, AML scoring, reconciliation) continue asynchronously.

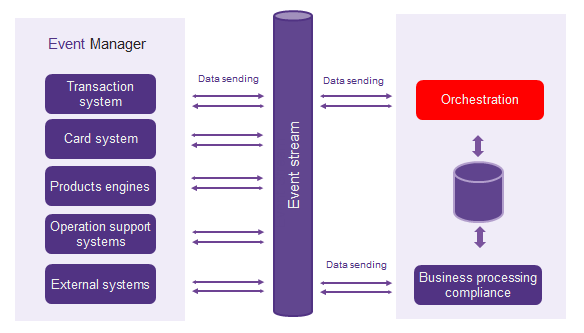

Event-driven architecture for low-latency transaction processing. (Source: BOS)

The visual illustrates how an event stream decouples transaction systems, card systems, product engines, and external services from the orchestration layer. Instead of a long blocking chain, each service sends and receives events independently. This reduces bottlenecks, prevents UI freezing during peak load, and keeps the overall system responsive even when internal processes require more time to complete.
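The acknowledge-first pattern can be sketched in a few lines. This is a minimal illustration, not a production design: an in-process `asyncio.Queue` stands in for a real event stream, and the names `accept_transfer`, `worker`, and the `transfer.requested` event type are hypothetical.

```python
import asyncio
import uuid


async def accept_transfer(queue: asyncio.Queue, payload: dict) -> dict:
    """Publish an event and acknowledge immediately, without waiting for processing."""
    event = {"id": str(uuid.uuid4()), "type": "transfer.requested", **payload}
    await queue.put(event)
    return {"transaction_id": event["id"], "status": "accepted"}


async def worker(queue: asyncio.Queue, processed: list) -> None:
    """Background consumer standing in for ledger, AML, and reconciliation work."""
    while True:
        event = await queue.get()
        try:
            await asyncio.sleep(0.05)  # simulated slow downstream processing
            processed.append(event["id"])
        finally:
            queue.task_done()


async def demo() -> tuple:
    queue: asyncio.Queue = asyncio.Queue(maxsize=1000)
    processed: list = []
    consumer = asyncio.create_task(worker(queue, processed))

    ack = await accept_transfer(queue, {"amount": 250, "currency": "USD"})
    processed_at_ack = list(processed)  # snapshot: nothing processed yet

    await queue.join()  # wait for the background work to drain
    consumer.cancel()
    await asyncio.gather(consumer, return_exceptions=True)
    return ack, processed_at_ack, processed
```

The caller receives an `accepted` status before any downstream work has run. A real system would also need durable storage for events and a way to report the final outcome to the user.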

Caching further strengthens this architecture when applied to the right data. High-frequency, low-volatility values such as balances, card status, limits, and beneficiary lists generate most read traffic during busy periods. By caching these items, the system avoids unnecessary queries that would otherwise flood the database. A read–write separation model reinforces this stability: the primary database handles essential ledger writes, while replicas serve the majority of read operations. This prevents read storms from slowing down mission-critical transactions and gives the backend room to breathe when traffic spikes sharply.
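As a rough sketch of the caching idea, the class below keeps values for a short time-to-live and falls back to a loader (for example, a read-replica query) on a miss. The class name, key format, and TTL are illustrative assumptions; a production system would more likely use a shared cache and would need an invalidation policy agreed with the ledger team.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Tiny in-memory cache with per-entry expiry."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        """Return a fresh cached value, or call the loader and cache its result."""
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = loader()
        self._store[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Drop an entry, e.g. right after a ledger write changes the balance."""
        self._store.pop(key, None)
```

Calling `invalidate` after each write on the primary keeps cached reads from serving a stale balance for longer than the write path allows.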

Together, these architectural decisions establish a foundation that absorbs irregular, high-volume banking traffic while keeping transaction response times consistent.

2. High-load optimization aligned with real banking behavior

Traffic in banking systems is highly patterned rather than random. Peak workloads repeatedly appear during:

Salary-day mornings

Loan repayment cutoffs

Evening user activity

Holiday and campaign periods

Systems perform better when scaling and load management respond to these patterns proactively. Traditional autoscaling, which relies on CPU or memory thresholds, reacts too slowly for financial workloads. More stable systems scale based on business-sensitive indicators such as authentication latency, fraud queue depth, gateway round-trip time, or the number of pending ledger writes.
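One way to picture scaling on business indicators is a function that sizes the service from the most stressed signal. The sketch below uses a proportional rule similar in spirit to the one used by the Kubernetes Horizontal Pod Autoscaler; the signal names, target values, and replica bounds are hypothetical and would need tuning against real traffic.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Signals:
    auth_p95_ms: float
    fraud_queue_depth: int
    pending_ledger_writes: int


@dataclass(frozen=True)
class Targets:
    # Illustrative targets only, not recommendations.
    auth_p95_ms: float = 150.0
    fraud_queue_depth: int = 500
    pending_ledger_writes: int = 1000


def desired_replicas(current: int, signals: Signals, targets: Targets = Targets(),
                     min_replicas: int = 2, max_replicas: int = 50) -> int:
    """Scale by the worst ratio of observed signal to target, clamped to bounds."""
    pressure = max(
        signals.auth_p95_ms / targets.auth_p95_ms,
        signals.fraud_queue_depth / targets.fraud_queue_depth,
        signals.pending_ledger_writes / targets.pending_ledger_writes,
    )
    wanted = math.ceil(current * pressure)
    return max(min_replicas, min(max_replicas, wanted))
```

Because the decision follows the worst ratio, a backlog in fraud scoring can trigger scaling even while CPU and memory look healthy.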

Another challenge is the sudden traffic burst caused by marketing pushes or cashback events. Without throttling or burst buffers, thousands of requests can hit the backend at the same millisecond, overwhelming even a well-provisioned cluster. A controlled admission layer ensures that essential operations (transfers, OTP, balance checks) remain responsive while the system moderates non-critical requests.
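A controlled admission layer could look roughly like the token bucket below, which holds back part of its capacity for essential operations. The operation names, rates, and the `reserve_for_critical` parameter are assumptions made for illustration.

```python
import time
from typing import Callable

# Hypothetical set of operations that must stay responsive.
CRITICAL = {"transfer", "otp", "balance"}


class AdmissionController:
    """Token bucket that keeps a reserve of tokens for critical operations."""

    def __init__(self, rate_per_sec: float, burst: int, reserve_for_critical: int,
                 clock: Callable[[], float] = time.monotonic):
        self._rate = rate_per_sec
        self._capacity = float(burst)
        self._reserve = float(reserve_for_critical)
        self._tokens = float(burst)
        self._clock = clock
        self._last = clock()

    def _refill(self) -> None:
        now = self._clock()
        self._tokens = min(self._capacity, self._tokens + (now - self._last) * self._rate)
        self._last = now

    def admit(self, operation: str) -> bool:
        """Admit if a token is available; non-critical calls cannot touch the reserve."""
        self._refill()
        floor = 0.0 if operation in CRITICAL else self._reserve
        if self._tokens - 1.0 >= floor:
            self._tokens -= 1.0
            return True
        return False
```

During a burst, non-critical requests are rejected first, and the reserved tokens remain available for transfers, OTP, and balance checks.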

The result is a system that reacts to real behavior, not superficial infrastructure metrics.

3. API tuning that prevents hidden latency buildup

As transaction volume grows, the cost of each API operation becomes increasingly visible. Banking APIs often trigger a chain of internal calls (validation, risk checks, limit calculations, fraud analysis, and ledger updates), and any inefficiency in that chain multiplies across thousands of concurrent requests. The strongest improvements come from reducing the number of internal round trips, reorganizing the order of checks, and merging steps that do not need to run independently. Teams like Twendee typically begin by mapping the internal call chain end-to-end to identify where small delays accumulate under heavy load.

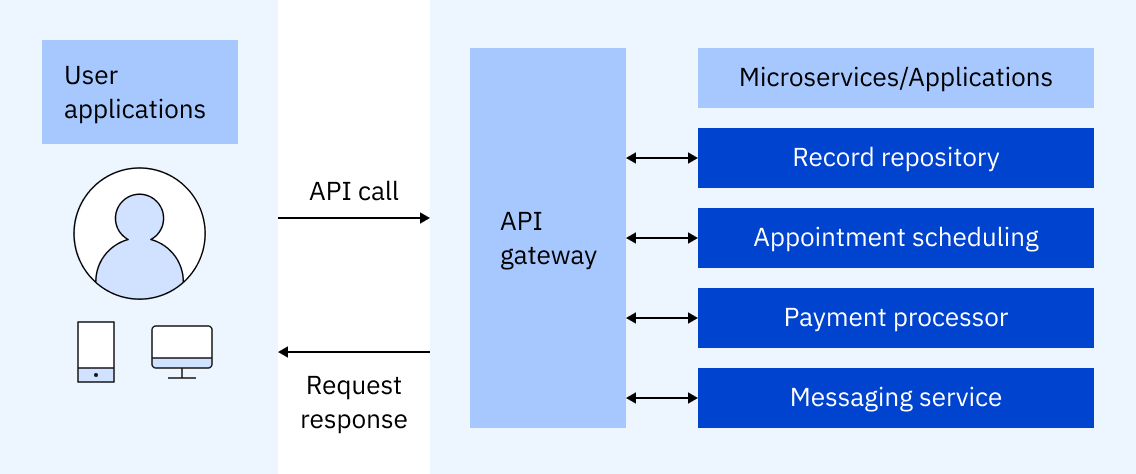

API gateway routing internal microservice calls. (Source: IBM)

A single user request often branches into multiple microservices, and if any component is slow, the overall latency rises even when infrastructure capacity seems sufficient. Slimming payloads provides another high-impact improvement. Many banking APIs return verbose objects or redundant fields, increasing serialization time, network transfer cost, and parsing overhead. Refining payload structure across high-traffic endpoints such as balance checks, transaction lists, and payment confirmations yields immediate performance gains.
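Payload slimming can be illustrated with a simple field projection: the endpoint returns only the fields a given screen needs. The record shape and field names below are invented for the example.

```python
import json

# Hypothetical verbose record as an internal service might return it.
FULL_ACCOUNT = {
    "account_id": "acct-001",
    "available_balance": 125000,
    "currency": "VND",
    "holder": {"name": "Example User", "segment": "retail", "kyc_level": 2},
    "branch": {"code": "0042", "address": "123 Example Street"},
    "audit": {"created_by": "system", "updated_by": "system", "version": 17},
}

# Fields a balance-check screen actually needs (assumed).
BALANCE_VIEW = ("account_id", "available_balance", "currency")


def project(record: dict, fields: tuple) -> dict:
    """Keep only the requested top-level fields."""
    return {name: record[name] for name in fields if name in record}


def wire_size(payload: dict) -> int:
    """Size in bytes of the compact JSON encoding."""
    return len(json.dumps(payload, separators=(",", ":")).encode("utf-8"))


slim = project(FULL_ACCOUNT, BALANCE_VIEW)
```

Comparing `wire_size(slim)` with `wire_size(FULL_ACCOUNT)` shows the saving per response, which then multiplies across every call to a high-traffic endpoint.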

Database access is another area where hidden delays surface. Hot paths often share tables with low-frequency operations, slow queries emerge only at scale, and missing indexes become critical during peak activity. By isolating high-frequency read/write paths, optimizing indexing patterns, and restructuring heavy queries, the backend reduces the amount of work required per request, which becomes especially important when the system processes thousands of concurrent transactions.
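The effect of a missing index is easy to see with a query planner. The sketch below uses SQLite from the Python standard library purely as a stand-in for a production database; the table, column, and index names are hypothetical, and planner output differs between engines and versions.

```python
import sqlite3

HOT_QUERY = (
    "SELECT id, amount FROM transactions "
    "WHERE account_id = ? ORDER BY created_at DESC LIMIT 20"
)


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple) -> str:
    """Return SQLite's plan description for a query as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # 4th column holds the detail text


def build_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE transactions ("
        "id INTEGER PRIMARY KEY, account_id TEXT NOT NULL, "
        "amount INTEGER NOT NULL, created_at TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO transactions (account_id, amount, created_at) VALUES (?, ?, ?)",
        [(f"acct-{i % 50}", i, f"2024-01-01T00:{i % 60:02d}:00") for i in range(1000)],
    )
    return conn


conn = build_demo_db()
plan_before = query_plan(conn, HOT_QUERY, ("acct-7",))  # full table scan

# Composite index matching the filter column and the sort column.
conn.execute("CREATE INDEX idx_tx_account_created ON transactions (account_id, created_at)")
plan_after = query_plan(conn, HOT_QUERY, ("acct-7",))   # index search
```

Printing `plan_before` and `plan_after` shows the planner moving from a table scan to a search on the new index, the kind of change that matters most on hot paths during peak activity.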

Fine-tuning the API layer removes many of the latency multipliers that only reveal themselves under real financial load, making it one of the most effective levers for improving overall banking app performance.

4. Security engineered for performance without sacrificing compliance

Security tasks such as authentication, device verification, fraud scoring, and AML screening are fundamental to financial systems, but under load they often become the slowest part of the transaction pipeline. Many banks still execute these checks sequentially, which means a single slow component (fraud API, KYC provider, gateway response) can hold up the entire request. In high-traffic environments, this pattern can account for 20–40% of total latency, based on internal engineering reports from multiple digital banking platforms.

Running these checks in parallel lanes dramatically reduces the total evaluation time. Instead of queuing AML, fraud, and device checks one after another, the system processes them concurrently and aggregates the results at the final decision point. Twendee often sees latency reductions of 120–300 ms on common operations (such as transfers or balance refresh flows) when parallelization is applied correctly.
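The difference between the two approaches can be sketched with `asyncio.gather`. The check names and simulated delays are placeholders, and the sketch assumes the checks are independent of one another, which has to be confirmed for any real pipeline.

```python
import asyncio
import time

# Hypothetical checks with simulated latencies in seconds.
CHECKS = [("aml", 0.03), ("fraud", 0.05), ("device", 0.02)]


async def check(name: str, delay_s: float, passed: bool = True) -> tuple:
    """Stand-in for a call to an external screening service."""
    await asyncio.sleep(delay_s)
    return name, passed


async def run_sequential() -> bool:
    """Each check waits for the previous one: total time is roughly the sum."""
    results = [await check(name, delay) for name, delay in CHECKS]
    return all(ok for _, ok in results)


async def run_parallel() -> bool:
    """All checks start together: total time is roughly the slowest check."""
    results = await asyncio.gather(*(check(name, delay) for name, delay in CHECKS))
    return all(ok for _, ok in results)


async def timed(coro_fn) -> tuple:
    """Run a coroutine function and return (decision, elapsed_seconds)."""
    start = time.perf_counter()
    decision = await coro_fn()
    return decision, time.perf_counter() - start
```

Both versions reach the same decision. Only the elapsed time differs, because the parallel version is bounded by the slowest check rather than by the sum of all three.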

Modern systems also adopt adaptive security paths that apply heavier scrutiny only where it is genuinely needed. For example:

Low-risk, repetitive behaviors (e.g., checking balances, viewing transaction history) skip deep AML cycles.

Trusted device + known location patterns follow a reduced verification route.

High-value or unusual transactions trigger full fraud scoring, sanctions lists, and enhanced AML checks.

This adaptive model ensures that high-volume, low-risk API calls do not consume the same resources as risk-sensitive operations. Public data from Mastercard and Visa indicates that over 70% of daily consumer app interactions fall into “low-risk repetitive” categories, meaning most traffic can be routed through lighter verification paths without compromising compliance.
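The three routes listed above could be expressed as a small routing function. Everything concrete here is an assumption for illustration: the operation names, the `HIGH_VALUE_THRESHOLD`, and the request fields. Real thresholds and rules are set by compliance policy, not by code defaults.

```python
from dataclasses import dataclass
from enum import Enum


class Path(Enum):
    LIGHT = "light"      # skips deep AML cycles
    REDUCED = "reduced"  # reduced verification route
    FULL = "full"        # full fraud scoring, sanctions lists, enhanced AML


# Hypothetical policy values.
READ_ONLY = {"balance_check", "transaction_history"}
HIGH_VALUE_THRESHOLD = 5_000


@dataclass(frozen=True)
class Request:
    operation: str
    amount: float = 0.0
    trusted_device: bool = False
    known_location: bool = False
    unusual: bool = False


def choose_path(request: Request) -> Path:
    """Pick the lightest verification path the risk signals allow."""
    if request.unusual or request.amount >= HIGH_VALUE_THRESHOLD:
        return Path.FULL
    if request.operation in READ_ONLY:
        return Path.LIGHT
    if request.trusted_device and request.known_location:
        return Path.REDUCED
    return Path.FULL  # default to full scrutiny when nothing lowers the risk
```

The function defaults to the full path, so an unrecognized operation or missing signal never results in lighter checks by accident.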

Token-based authentication further reduces overhead by replacing repeated session-store lookups with a lightweight local check of a signed token on each request. During OTP or login surges, moments when banks typically experience 5× to 12× spikes in authentication traffic, this keeps the identity system responsive while offloading unnecessary work from backend services.
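One reading of this approach is that each service verifies a signed token locally instead of querying a session store. The sketch below uses an HMAC signature from the Python standard library to show the idea. The token format is a simplified, hypothetical one (not JWT), the secret is a demo value, and a real deployment would rely on a vetted library plus key rotation and revocation.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"demo-only-secret"  # assumption: in practice, loaded from a key manager


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def _sign(body: str) -> str:
    return _b64(hmac.new(SECRET, body.encode("ascii"), hashlib.sha256).digest())


def issue_token(user_id: str, ttl_s: float, now: float = None) -> str:
    """Create a 'body.signature' token carrying the user id and an expiry."""
    now = time.time() if now is None else now
    body = _b64(json.dumps({"sub": user_id, "exp": now + ttl_s}).encode("utf-8"))
    return f"{body}.{_sign(body)}"


def verify_token(token: str, now: float = None):
    """Return the claims if the signature is valid and unexpired, else None."""
    now = time.time() if now is None else now
    try:
        body, signature = token.split(".")
    except ValueError:
        return None
    if not hmac.compare_digest(signature, _sign(body)):
        return None
    claims = json.loads(base64.urlsafe_b64decode(body))
    return claims if claims["exp"] > now else None
```

Verification needs only the shared secret and the current time, so it involves no network call or database read, which is what keeps the identity system responsive during a login surge.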

The result is a security layer that preserves compliance, responds intelligently to risk, and avoids becoming the bottleneck during peak traffic.

Conclusion

Sustaining high performance in modern banking applications requires more than adding servers or increasing throughput. The real gains come from architectural discipline: reducing synchronous blocking, minimizing internal call chains, engineering security for speed, and scaling based on real transaction behavior rather than infrastructure metrics alone. When these layers operate together, latency becomes predictable even under irregular, high-volume traffic, and the system maintains stability during salary cycles, repayment windows, or promotional surges. The result is an experience where performance, compliance, and reliability reinforce each other instead of competing.
