AI has already transformed how credit decisions are made. What used to take days of manual underwriting is now executed in milliseconds through automated scoring models. Yet as AI credit decisioning becomes faster and more complex, a new challenge has emerged, one that accuracy alone cannot solve. Customers, regulators, and internal risk teams are increasingly asking the same question: Why was this decision made?

This is where explainable AI credit risk shifts from a regulatory requirement to a strategic lever. Explainability is no longer only about satisfying auditors or meeting disclosure obligations. It is becoming a decisive factor in customer experience, trust, and long-term viability of AI-driven credit systems.

Explainable AI Credit Risk as a Customer Experience and Trust Lever

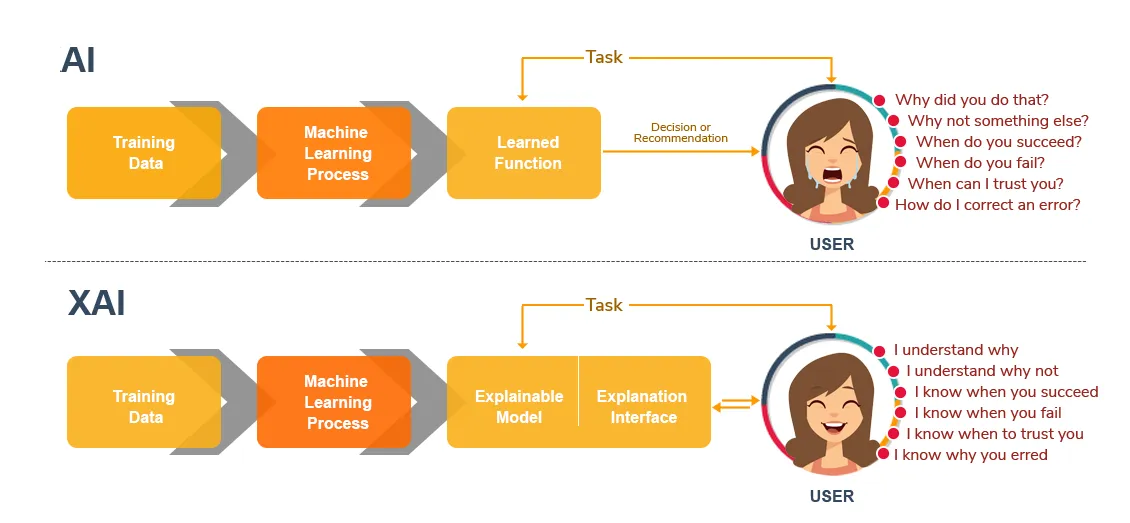

Explainable AI credit risk refers to the design of AI-driven credit decision systems in which the rationale behind approval, rejection, or pricing decisions can be clearly understood, traced, and communicated, both internally to risk, compliance, and governance teams, and externally to customers and regulators. Unlike traditional black-box models that prioritize predictive accuracy alone, explainable AI in credit risk emphasizes decision transparency, feature-level accountability, and reproducible reasoning, making automated credit decisions defensible and trust-building by design.

In credit and risk, customer experience is often discussed in terms of speed, convenience, or approval rates. Yet in AI-driven credit decisioning, the most defining moments of experience occur not when a decision is fast but when it is adverse, unexpected, or difficult to accept. This is precisely where explainable AI credit risk becomes strategically important.

Explainable AI introduces a new layer into the credit experience: the ability for automated decisions to be understood, contextualized, and trusted. Instead of positioning AI as an invisible authority that issues outcomes without dialogue, explainability turns the system into an accountable participant in the decision process. For borrowers, this shift fundamentally changes how credit decisions are perceived: no longer as opaque judgments, but as reasoned outcomes.

Explainable AI transforms opaque credit decisions into an understandable customer experience (source: ifourtechnolab)

This is a subtle but important shift: explainability does not merely “justify” a decision after the fact. Done well, it changes the nature of the interaction.

A rejection becomes a diagnosis rather than a dead end.

A credit limit change becomes a transparent trade-off rather than a surprise.

A pricing adjustment becomes a consistent rationale rather than perceived arbitrariness.

That difference matters because credit decisions are high-stakes moments. The experience is defined by what happens immediately after the decision: confusion, dispute, churn, or continued engagement.

This is also where “trust” becomes measurable. The CFPB’s consumer complaint data shows the scale of friction customers experience across financial products: the CFPB reported receiving ~3.19 million complaints in 2024, and sent ~2.83 million to companies for response. Not every complaint is about AI, but the macro signal is clear: misunderstood outcomes and missing explanations are a recurring source of consumer frustration in finance.

At scale, this effect compounds. As AI credit decisioning expands across digital lending, embedded finance, and real-time risk assessment, institutions are no longer judged only by portfolio performance, but by how trustworthy their automated decisions appear to those affected by them. In this context, explainable AI becomes a trust infrastructure, one that quietly underpins adoption, reduces friction, and enables AI systems to scale without triggering resistance from customers or internal stakeholders.

Seen through this lens, explainable AI credit risk is not an enhancement to existing models. It is a strategic experience layer that aligns automation with human expectations of fairness and reasoned judgment, transforming transparency into a durable competitive advantage in credit and risk ecosystems.

How Explainable Risk Models Reshape Customer Perception in AI Credit Decisioning

Explainable risk models reshape perception through three mechanisms that most “AI transparency” conversations overlook: specificity, consistency, and actionability.

1) Specificity beats generic “reason codes”

In many markets, adverse action notices still rely on standardized reason templates. Regulators are pushing back on that approach when AI is involved. The CFPB has explicitly emphasized that creditors must provide accurate and specific principal reasons for adverse action, and cannot rely on vague or generic explanations that fail to reflect the true drivers of the decision (Source: CFPB Circular 2023-03 PDF). For customer experience, specificity is not only legal hygiene. It determines whether the decision feels legitimate.
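To make the idea of "specific principal reasons" concrete, here is a minimal sketch that ranks the factors that pulled one applicant's score down. It assumes a simple linear scorecard; the feature names, weights, baseline values, and reason wording are all hypothetical and chosen only for illustration. Real models, and the reasons they support, will differ.

```python
# Hypothetical linear scorecard. Feature names, weights, and baseline
# values are illustrative assumptions, not taken from any real model.
WEIGHTS = {
    "utilization": -2.0,               # higher utilization lowers the score
    "months_since_delinquency": 0.03,  # more time since a delinquency raises it
    "income_to_debt": 0.8,             # more income per unit of debt raises it
}
BASELINE = {
    "utilization": 0.30,
    "months_since_delinquency": 48.0,
    "income_to_debt": 2.5,
}
# Hypothetical customer-facing wording, one fixed sentence per factor.
REASON_TEXT = {
    "utilization": "Revolving credit utilization is high relative to limits",
    "months_since_delinquency": "A delinquency was reported recently",
    "income_to_debt": "Income is low relative to existing debt",
}


def contributions(applicant):
    """Per-feature score contribution relative to the baseline applicant."""
    return {name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
            for name in WEIGHTS}


def principal_reasons(applicant, top_n=2):
    """Names of the features that lowered this applicant's score the most."""
    negative = [(value, name)
                for name, value in contributions(applicant).items()
                if value < 0]
    # Sorting ascending puts the most negative contribution first.
    return [name for value, name in sorted(negative)[:top_n]]


applicant = {
    "utilization": 0.90,
    "months_since_delinquency": 6.0,
    "income_to_debt": 2.4,
}
for name in principal_reasons(applicant):
    print(REASON_TEXT[name])
```

The point of the sketch is that the reasons are derived from what actually moved this applicant's score, rather than picked from a fixed template that may not reflect the real drivers.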

2) Consistency prevents “trust drift”

Customers rarely see one decision. They see a pattern: pre-approval messages, follow-up limit updates, different results across channels. If explanations vary across decisions that look similar, customers infer unfairness even when the model is technically correct. Explainability, therefore, needs product-level discipline: standardized explanation language, stable factor hierarchies, and clear boundaries for what can and cannot be said.

3) Actionability converts frustration into retention

The highest-leverage form of explanation is one that helps a borrower understand what can change the outcome. In practice, this is where counterfactual explanations and policy-linked guidance outperform static feature attribution. A customer does not need SHAP charts. They need a credible pathway forward.
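As a rough illustration of a counterfactual explanation, the sketch below walks one actionable feature in fixed steps until a declined application would be approved. The logistic model, its weights, the threshold, and the feature names are assumptions made up for this example; production counterfactual methods usually search several features at once and enforce plausibility constraints.

```python
from math import exp

# Hypothetical logistic approval model (weights and threshold are assumptions).
WEIGHTS = {"utilization": -3.0, "on_time_payment_rate": 4.0}
INTERCEPT = -2.0
APPROVAL_THRESHOLD = 0.5


def approval_probability(applicant):
    """Logistic score for an applicant given as a dict of feature values."""
    z = INTERCEPT + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return 1.0 / (1.0 + exp(-z))


def counterfactual(applicant, feature, step, limit):
    """Smallest stepped change to one feature that flips a decline to approve.

    Returns the new feature value, or None if no value between the current
    one and `limit` is enough on its own.
    """
    n_steps = int(round(abs(limit - applicant[feature]) / abs(step)))
    candidate = dict(applicant)
    for i in range(1, n_steps + 1):
        candidate[feature] = round(applicant[feature] + i * step, 4)
        if approval_probability(candidate) >= APPROVAL_THRESHOLD:
            return candidate[feature]
    return None


declined = {"utilization": 0.80, "on_time_payment_rate": 0.90}
target = counterfactual(declined, "utilization", step=-0.05, limit=0.0)
if target is not None:
    print(f"Reducing utilization to {target:.0%} would change the outcome.")
```

A result of None is also informative: it signals that this factor alone cannot change the outcome, so guidance built on it would not be a credible pathway.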

This is why the best explainability programs are not “model documentation projects.” They are experience design projects embedded in AI credit decisioning.

Why Explainability Is Central to Trust at Scale

Fair lending is often framed as a compliance obligation. But at scale, it is also a trust obligation. When AI models incorporate alternative data, the system can become unintentionally sensitive to proxy variables that correlate with protected characteristics.

Explainability is what makes fairness operable:

It helps detect proxy influence and unexpected drivers early

It supports internal challenge processes and model governance

It creates defensible narratives for regulators and customers
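One simple way to start looking for proxy influence is a correlation screen between each model input and a protected attribute that is held out for testing only. The sketch below assumes a tiny synthetic dataset and an arbitrary 0.5 threshold; both are illustrative, and the feature names are hypothetical. A correlation screen is only a first pass and does not replace a proper fair lending analysis.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def flag_proxies(features, protected, threshold=0.5):
    """Feature names whose absolute correlation with the protected
    attribute meets or exceeds the (assumed) threshold."""
    return sorted(name for name, values in features.items()
                  if abs(pearson(values, protected)) >= threshold)


# Synthetic data for illustration only: 8 applicants, binary group label.
protected_group = [0, 0, 0, 0, 1, 1, 1, 1]
model_inputs = {
    "zip_code_income_index": [9, 8, 9, 7, 3, 2, 4, 3],
    "utilization": [0.2, 0.8, 0.5, 0.6, 0.3, 0.7, 0.5, 0.6],
}
print(flag_proxies(model_inputs, protected_group))
```

Flagged features are candidates for internal challenge, not automatic removals. The governance process decides whether a business justification holds.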

The NIST AI Risk Management Framework explicitly names “explainable and interpretable” and “fair with harmful bias managed” as core characteristics of trustworthy AI systems (Source: NIST AI RMF 1.0 PDF). That pairing is not accidental: fairness without explainability is hard to demonstrate, and explainability without fairness quickly becomes reputational risk.

In Europe, the European Banking Authority has also discussed practical obstacles in using ML for credit risk under regulatory frameworks including GDPR and the EU AI Act, which reinforces why explainability and traceability become decisive implementation constraints for lenders (Source: EBA Follow-up Report PDF).

The implication for leaders is straightforward: if fair lending AI is a non-negotiable expectation, then explainability is not optional plumbing. It is the system capability that makes fairness provable.

From Black Box to Explainable Risk Models: What Changes Architecturally

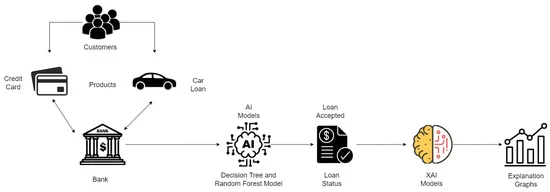

Explainability fails when it is bolted on. In real credit environments, it must be engineered across the decision lifecycle: data → model → decision → disclosure → audit.

Explainability functions as a dedicated layer across the credit decision lifecycle. (source: mdpi)

A practical architecture for explainable AI credit risk typically includes:

Decisioning layer (rules + model outputs): separates policy constraints from prediction

Explanation layer: generates stable “why” narratives tied to factors that can be defended

Evidence layer: retains decision context, feature values, and data lineage for audit

Monitoring layer: tracks explanation stability, drift, and fairness metrics over time
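The layers above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference design: the policy rule, the stand-in scoring function, the cutoff, the reason labels, and the record fields are all hypothetical. It shows policy constraints being evaluated separately from the model score, and an evidence record that captures what an audit would need.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Optional

MODEL_VERSION = "scorecard-v1"  # hypothetical version label
MIN_AGE = 18                    # hypothetical policy constraint
SCORE_CUTOFF = 0.6              # hypothetical approval cutoff


@dataclass(frozen=True)
class DecisionRecord:
    """Evidence layer: everything needed to explain the decision later."""
    applicant_id: str
    model_version: str
    features: dict
    policy_rule_hit: Optional[str]
    model_score: Optional[float]
    outcome: str
    reasons: tuple


def policy_check(features):
    """Decisioning layer, policy part: hard rules, independent of the model."""
    if features["age"] < MIN_AGE:
        return "below_minimum_age"
    return None


def model_score(features):
    """Decisioning layer, prediction part: stand-in for a real model."""
    return round(1.0 - features["utilization"], 4)


def decide(applicant_id, features):
    rule = policy_check(features)
    if rule is not None:
        # A policy decline never touches the model, so the explanation
        # must cite the rule rather than a model factor.
        return DecisionRecord(applicant_id, MODEL_VERSION, features,
                              rule, None, "declined", (rule,))
    score = model_score(features)
    outcome = "approved" if score >= SCORE_CUTOFF else "declined"
    reasons = () if outcome == "approved" else ("high_utilization",)
    return DecisionRecord(applicant_id, MODEL_VERSION, features,
                          None, score, outcome, reasons)


def fingerprint(record):
    """Stable hash of the record, useful for tamper-evident audit storage."""
    payload = json.dumps(asdict(record), sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


record = decide("applicant-001", {"age": 30, "utilization": 0.7})
print(record.outcome, record.reasons, fingerprint(record)[:12])
```

Keeping the policy rule and the model score in separate fields means a later explanation can state whether a rule or a prediction drove the outcome. A monitoring layer could then aggregate these records over time.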

This aligns with long-standing model risk management expectations. In the US, SR 11-7 emphasizes governance, validation, and controls across model development and use (Source: Federal Reserve SR 11-7). Explainability is where these governance principles meet customer-facing outcomes.

A useful way to test architectural maturity is to ask: can the organization reconstruct a credit decision months later and explain it consistently to three audiences?

internal risk and model validation

compliance and regulators

the customer receiving the outcome

If the answer is “no,” the system is operating as a black box, even if an explanation dashboard exists.
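One way to picture this reconstruction test is a replay check: load a stored decision, re-run the model version that was live at the time, and confirm the outcome matches. The sketch below is an assumption-laden illustration; the registry, version labels, scoring functions, cutoff, and record layout are all hypothetical.

```python
import json

# Hypothetical registry of frozen scoring functions, keyed by version.
MODEL_REGISTRY = {
    "v1": lambda f: 1.0 - f["utilization"],
    "v2": lambda f: 1.0 - 0.8 * f["utilization"],
}
SCORE_CUTOFF = 0.6  # assumed to be the same for both versions


def replay(stored_json):
    """Re-run a stored decision with the model version recorded at the time."""
    record = json.loads(stored_json)
    score = MODEL_REGISTRY[record["model_version"]](record["features"])
    outcome = "approved" if score >= SCORE_CUTOFF else "declined"
    return {
        "replayed_outcome": outcome,
        "stored_outcome": record["outcome"],
        "matches": outcome == record["outcome"],
    }


stored = json.dumps({
    "applicant_id": "applicant-001",
    "model_version": "v1",
    "features": {"utilization": 0.45},
    "outcome": "declined",
})
print(replay(stored))
```

In this example, replaying the same features against the newer "v2" function would produce an approval. That is why the stored record has to pin the model version: without it, the explanation given months later may describe a different decision than the one the customer received.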

Turning Explainability Into Measurable Business Outcomes

Explainability becomes a “lever” only when it changes business outcomes, not when it improves documentation. The most measurable impacts tend to cluster in three areas:

Lower dispute and complaint load, because outcomes are understood sooner

Faster internal overrides and exception handling, because decision drivers are clearer

Shorter audit cycles and higher governance confidence, because evidence is reconstructable

Deloitte’s work on explainable AI in banking frames XAI as a way to improve transparency and trust while strengthening governance, especially as regulators expect outcomes to be “reasonably understood” by bank staff (Source: deloitte).

On the supervisory side, BIS research has noted that AI explainability is a leading issue raised by financial institutions when engaging with regulators and supervisors, which effectively makes explainability a practical constraint on speed-to-deploy in regulated environments (Source: BIS FSI paper PDF). In other words: even if a model is accurate, explainability can determine whether it ships, scales, and survives scrutiny.

Explainable AI credit risk is not solved by choosing a single interpretability method. It is solved by designing a decision system that is coherent across product, policy, engineering, and governance. This is the level at which Twendee typically operates, working with product, risk, and engineering teams to design AI credit decisioning systems where explainability is embedded across workflows, governance, and customer touchpoints, so that transparency can scale without compromising performance or control. Twendee supports lenders and fintech platforms by implementing AI decision systems that:

Generate clear, consistent explanations for internal stakeholders and end customers

Preserve decision traceability and evidence retention aligned with model risk expectations

Embed explainability into AI credit decisioning workflows so transparency becomes part of the experience, not an afterthought

Support fairness assessments and operational controls needed for fair lending AI programs

The goal is not to make models “more explainable” in isolation. The goal is to make credit decisions defensible, auditable, and trust-building as the system scales.

Conclusion

As AI increasingly determines who gets access to credit, on what terms, and at what cost, the real differentiator is no longer model accuracy alone. It is whether those decisions can be understood, trusted, and defended by regulators, internal teams, and customers alike. Explainable AI credit risk has quietly become the trust infrastructure behind modern lending, shaping how borrowers perceive fairness, accountability, and confidence in automated decisions. Institutions that treat explainability as a core experience layer, not a post-hoc compliance feature, will be better positioned to scale AI credit decisioning without sacrificing trust or resilience.

For organizations designing AI-driven credit and risk platforms, Twendee works alongside product, risk, and engineering teams to architect explainable decision systems where transparency, governance, and customer trust are built in from the start.