AI can now write production-ready code in seconds, faster than any human but not necessarily more safely. Behind this new velocity lies a quiet, growing threat: flawless-looking code that hides serious security gaps. LLMs don’t understand intent; they replicate patterns. And when those patterns include outdated or unsafe logic, vulnerabilities scale as quickly as productivity. Teams celebrating faster releases may unknowingly deploy code that’s perfectly functional and dangerously exposed.

This piece reveals why unchecked AI-generated code creates invisible attack surfaces, how secure coding culture is falling behind, and what it takes to rebuild trust through intelligent auditing and AI-aware DevSecOps frameworks.

What Makes AI-Generated Code a Security Minefield?

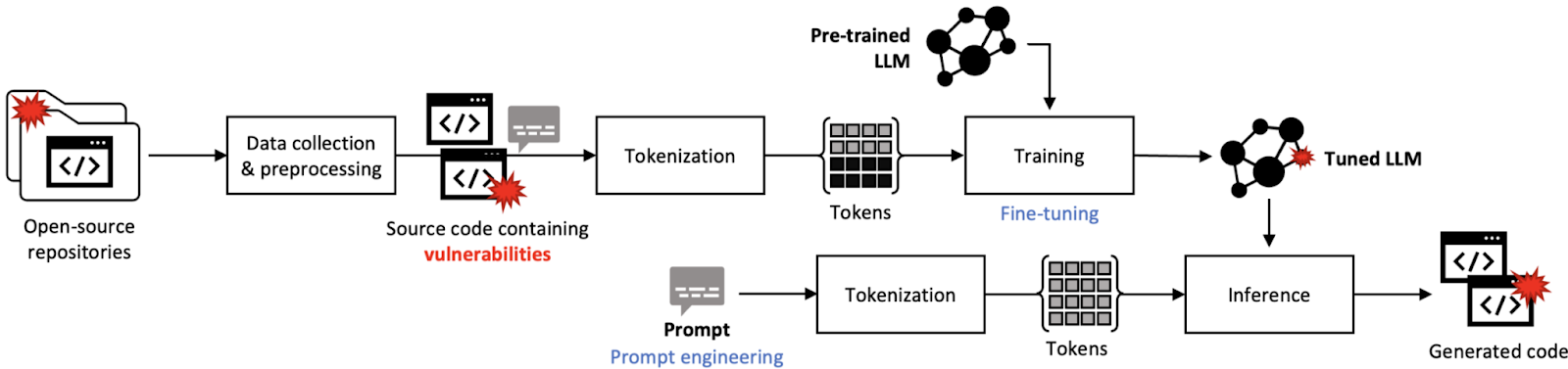

AI-generated code has redefined how quickly teams can build software. What once required weeks of manual work can now be done in days, sometimes hours. Yet beneath that acceleration lies a quiet danger: code that runs perfectly but hides invisible security flaws. Large Language Models (LLMs) don’t reason about intent or compliance; they simply predict statistically probable code patterns based on training data, much of which includes insecure or outdated practices. The result is efficiency built on unstable ground.

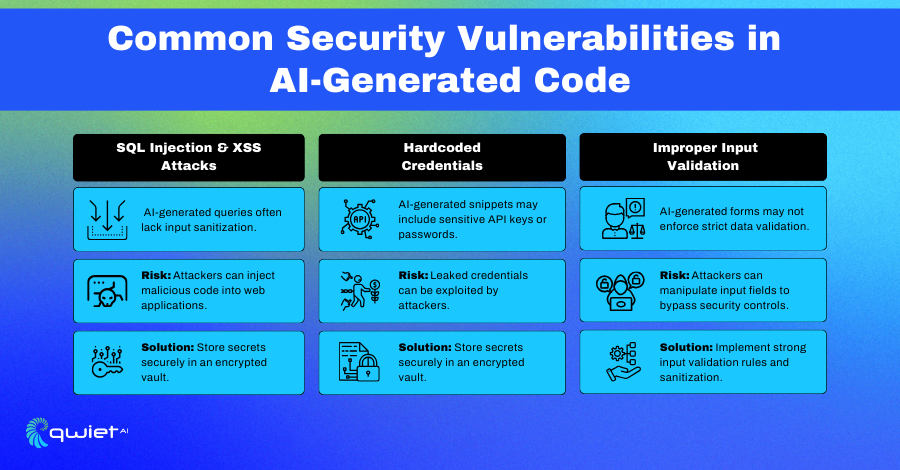

Recycled insecurity: AI assistants often reproduce unsafe examples from open-source data. Common patterns include hard-coded API keys, missing input validation, and weak encryption logic. A Stanford–GitHub study (2023) found that over 40% of AI-generated snippets contained critical vulnerabilities, most of which were merged unchanged because they “looked right.”
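As a minimal sketch of the alternative to two of the patterns above (hard-coded keys and missing input validation), the snippet below reads a secret from the environment and applies an allow-list check to user input. The names `SERVICE_API_KEY`, `load_api_key`, and `validate_username`, as well as the username rule itself, are illustrative assumptions rather than anything prescribed by a specific framework.

```python
import os
import re

# Unsafe patterns often seen in generated snippets (shown for contrast only):
#   API_KEY = "sk-live-..."      # secret committed to source control
#   username = request_value     # used directly, with no validation

# Hypothetical allow-list rule: 3 to 32 letters, digits, or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,32}")


def load_api_key(env_var: str = "SERVICE_API_KEY") -> str:
    """Read the secret from the environment instead of the source file."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    return key


def validate_username(raw: str) -> str:
    """Accept only input that matches the allow-list; reject everything else."""
    if not USERNAME_RE.fullmatch(raw):
        raise ValueError("invalid username")
    return raw
```

Failing loudly when the secret is missing or the input is malformed is a deliberate choice here: a generated snippet that silently falls back to a default tends to "look right" in review.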

Compressed review windows: By generating code instantly, AI collapses the feedback loop between creation and validation. Developers skip steps that once caught errors (peer review, threat modeling, or secure-coding checks) simply because the pace no longer allows them. Automated scanners can flag surface bugs but miss deeper architectural flaws, especially those introduced by probabilistic reasoning. A 2025 McAfee Labs report showed that 27% of vulnerabilities in AI-assisted repositories were logic-level errors invisible to existing tools.

Automation bias and false confidence: When code compiles cleanly, developers tend to assume it’s safe. Stack Overflow’s 2024 survey revealed that 65% of engineers deployed AI-written code with minimal review, while fewer than one-third ran any follow-up security scans. This misplaced trust turns speed into exposure: security debt quietly accumulates across systems.

Shadow code and accountability gaps: Quick AI fixes or helper scripts often slip into production without documentation or license tracking. Checkmarx (2025) reported that 8% of insecure open-source packages in enterprise apps were introduced indirectly through AI recommendations. Once deployed, tracing the origin of a vulnerability becomes nearly impossible: the model prompt is lost, the version forgotten, and no one can explain the decision path.

Vulnerability propagation from training data to AI-generated code (Source: Research diagram)

In essence, AI doesn’t invent new types of insecurity; it amplifies existing ones at scale. It expands the codebase faster than security teams can review, creating an illusion of progress while quietly eroding trust. Unless organizations treat every AI-generated line as unverified until proven safe, acceleration will continue to outpace assurance, and productivity will come at the cost of protection.

Where Secure Coding Falls Short in the Age of AI

Secure coding once relied on human intuition, careful reasoning, peer review, and step-by-step validation. But with AI writing entire functions in seconds, those safety rituals are being replaced by automation and trust. The problem isn’t that engineers forgot security; it’s that AI’s speed and opacity have outpaced human control.

To better understand how security frameworks are adapting to this new era, explore our companion article on AI-generated code and the need for stronger security and compliance.

1. The Pace Problem: AI Outruns Security Gates

AI tools like GitHub Copilot or Amazon CodeWhisperer generate deployable modules almost instantly. That speed compresses the traditional DevSecOps workflow: reviews that once took hours now happen, if at all, in minutes. Security gates built for human rhythm simply cannot match machine output. According to GitGuardian (2024), 62% of AI-assisted teams push code before completing required security checks, often assuming the model’s accuracy compensates for missing reviews. In practice, this turns secure coding from a proactive discipline into a reactive cleanup process. A fintech case study in 2024 revealed that a Copilot-generated payment endpoint leaked transaction metadata because input validation was skipped to meet sprint deadlines, a direct tradeoff between speed and scrutiny.

2. Shallow Verification in Deep-Learning Workflows

AI-generated code challenges traditional scanning tools. Static analyzers and linters detect syntactic flaws but not semantic intent. When an LLM rewrites logic inline or dynamically imports unsafe modules, most scanners see nothing wrong. McAfee Labs (2025) found that 27% of vulnerabilities in AI-coded repositories were logic-level issues invisible to conventional static tools. For example, one AI-generated function refactored a user-permission check into a helper method and, in doing so, removed a crucial validation step. The output was “clean code,” but functionally insecure. This mismatch between AI creativity and tool predictability leaves critical gaps in verification.
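To make that refactoring failure concrete, here is a hypothetical reconstruction (not the code from the report): a permission check is extracted into a helper, and the "account is active" condition is lost along the way. Both versions are syntactically clean, so a linter has nothing to flag, but a small behavioral comparison over a few representative cases exposes the difference. All names below are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class User:
    name: str
    roles: frozenset
    active: bool = True


def can_delete_original(user: User, owner: str) -> bool:
    # Original rule: the account must be active AND be an admin or the owner.
    return user.active and ("admin" in user.roles or user.name == owner)


def _has_access(user: User, owner: str) -> bool:
    # Hypothetical refactor: the role logic moved here, the active check did not.
    return "admin" in user.roles or user.name == owner


def can_delete_refactored(user: User, owner: str) -> bool:
    return _has_access(user, owner)


def behavioural_diff(cases):
    """Return the (user, owner) cases where the two versions disagree."""
    return [
        (user, owner)
        for user, owner in cases
        if can_delete_original(user, owner) != can_delete_refactored(user, owner)
    ]
```

A suspended admin is the case that separates the two versions, which is exactly the kind of input a unit test written for the happy path tends to omit.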

3. The Human Trust Bias

Developers are conditioned to trust what compiles. When AI outputs correct syntax and passes unit tests, teams assume it’s safe, a cognitive trap known as automation bias. Stack Overflow’s 2024 survey revealed that 65% of engineers deploy AI-written code with minimal review, while only a third run additional security scans afterward. In large teams, this bias multiplies. A single insecure prompt reused across microservices can replicate vulnerabilities organization-wide. Over time, that pattern erodes the cultural foundation of secure coding, namely skepticism and accountability, and replaces it with overconfidence in machine precision.

4. Governance and Accountability Gaps

Most security frameworks still assume a human author who can explain intent and justify design decisions. AI shatters that traceability. Prompts go unlogged, model versions change silently, and code provenance disappears. When vulnerabilities emerge, teams can’t answer basic questions: Who wrote this logic? Which model produced it? Under what constraints? Gartner’s DevSecOps Outlook 2025 warns that “AI-generated output introduces code without ownership.” This makes compliance auditing nearly impossible. In regulated industries like finance or healthcare, such opacity can violate ISO 27001 or GDPR requirements, turning a technical oversight into a legal liability.

Secure coding, as it stands today, is colliding with a development model it wasn’t built for. AI magnifies efficiency but dilutes accountability. Unless organizations evolve toward AI-aware secure development (embedding audit logs, human-in-the-loop reviews, and semantic security scans), every gain in productivity will come with a proportional rise in risk. The real challenge ahead isn’t writing faster code, but ensuring that what’s written at machine speed remains trustworthy at human scale.

Common security flaws found in AI-generated code including injection and credential issues (Source: QuietAI)

The Role of AI Code Audits and LLM Vulnerability Checks

If AI-generated code introduces new layers of uncertainty, then AI code auditing and LLM vulnerability assessment must become the new cornerstones of software assurance. Traditional static reviews alone can no longer safeguard systems written at machine speed. The challenge is not to slow innovation, but to embed security checks seamlessly within the same automated pipelines that AI empowers.

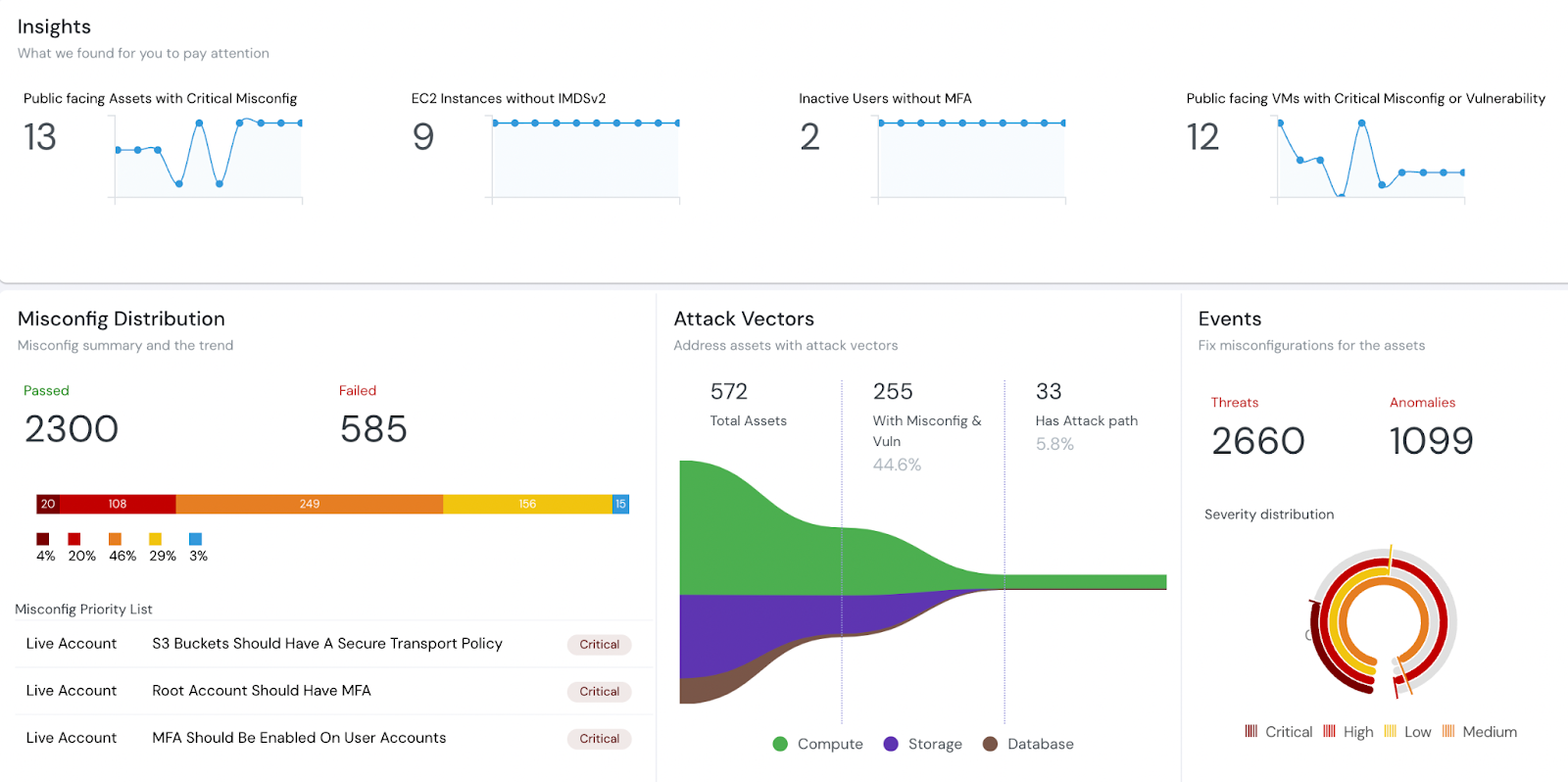

AI code auditing as a new DevSecOps layer: Modern auditing tools extend beyond syntax scanning. They analyze the intent and behavior of generated code, comparing outputs against secure-coding standards like OWASP and NIST. Platforms such as CodiumAI, DeepCode, and SonarQube’s AI-integrated plugins can identify abnormal logic paths, such as unchecked parameter flows or insecure API calls, long before deployment. These systems act as the first filter, automatically flagging high-risk suggestions that human reviewers may overlook.

Human-in-the-loop verification: AI should never be left to assess its own output. Security assurance requires human oversight, particularly in validating access controls, authentication routines, and data-handling logic. By assigning human reviewers at critical merge points, organizations can reintroduce accountability without slowing delivery. This hybrid approach transforms the development cycle from AI-driven to AI-assisted, with human judgment embedded by design.
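One way to picture "human reviewers at critical merge points" is a small gate that lists which changed files in an AI-assisted change touch security-sensitive areas and therefore need a named human approver. This is only a sketch: the path patterns, the `ai_assisted` flag, and the function name are assumptions, and a real pipeline would take them from its own repository layout and merge-request metadata.

```python
from fnmatch import fnmatchcase

# Hypothetical patterns for areas where a human sign-off is mandatory.
SENSITIVE_PATTERNS = ("*/auth/*", "*/payments/*", "*permissions*")


def needs_human_review(changed_files, ai_assisted: bool) -> list:
    """Return the changed files that must be approved by a human reviewer."""
    if not ai_assisted:
        # In this sketch, changes without AI involvement follow the normal process.
        return []
    return [
        path
        for path in changed_files
        if any(fnmatchcase(path, pattern) for pattern in SENSITIVE_PATTERNS)
    ]
```

For example, a change touching `src/auth/login.py` and `README.md` would require sign-off only for the first file, so the gate adds friction where access control or data handling is involved and nowhere else.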

Prompt and model provenance tracking: One of the most effective yet most neglected practices is logging the prompt-to-output chain. Every AI code commit should include metadata identifying the model version, parameters, and prompt that produced it. This enables teams to trace vulnerabilities back to their origin during audits. Emerging solutions like Codegen Trace or internal Git hooks for LLM logging are now being used by enterprise DevSecOps teams to maintain that visibility.
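A lightweight way to attach that metadata is to append key-value trailer lines to the commit message, for instance from an internal Git hook. The sketch below only builds the text; the trailer names (`AI-Model`, `AI-Prompt-SHA256`, and so on) are invented for illustration and are not a standard. It also records a SHA-256 hash of the prompt rather than the prompt itself, on the assumption that the full prompt is stored in a separate log and may be too long or too sensitive for a commit message.

```python
import hashlib
import json


def provenance_trailers(model: str, model_version: str, params: dict, prompt: str) -> str:
    """Build commit-message trailer lines describing how the code was generated."""
    # Hash the prompt so the commit can be matched against an external prompt log.
    prompt_hash = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
    lines = [
        f"AI-Model: {model}",
        f"AI-Model-Version: {model_version}",
        # sort_keys keeps the output stable for identical parameters.
        f"AI-Params: {json.dumps(params, sort_keys=True)}",
        f"AI-Prompt-SHA256: {prompt_hash}",
    ]
    return "\n".join(lines)
```

Because the output is deterministic for the same inputs, an auditor can recompute the hash from the logged prompt and confirm that it matches what was committed.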

Sandbox validation and behavioral testing: Running AI-generated code in an isolated test environment helps detect runtime anomalies invisible to static analysis. Sandbox testing tools can simulate adversarial conditions, such as injection attempts or permission escalations, to evaluate how AI-generated components behave under stress. In FinTech and healthcare software, this practice has already prevented breaches caused by unverified AI-generated payment and data-handling logic.
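The idea can be sketched at small scale with an in-memory SQLite database standing in for the isolated environment (a real sandbox would also isolate the process, network, and filesystem). A probe feeds known injection payloads to a lookup function and reports every payload that returns rows or breaks the query. The two lookup functions, the payload list, and the table layout are all hypothetical stand-ins for generated code under test.

```python
import sqlite3

# Hypothetical adversarial inputs; a real suite would be far larger.
INJECTION_PAYLOADS = ["' OR '1'='1", "x'; DROP TABLE users; --"]


def make_sandbox_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database for each probe."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def lookup_unsafe(conn, name):
    # Stand-in for generated code that builds SQL by string formatting.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()


def lookup_safe(conn, name):
    # Parameterized query: the payload is treated as data, not as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


def probe(lookup) -> list:
    """Return the payloads for which the lookup leaks rows or raises."""
    failures = []
    for payload in INJECTION_PAYLOADS:
        conn = make_sandbox_db()
        try:
            rows = lookup(conn, payload)
        except (sqlite3.Error, sqlite3.Warning):
            rows = None  # the payload changed the query enough to break it
        finally:
            conn.close()
        if rows is None or rows:
            failures.append(payload)
    return failures
```

No legitimate user is named like a payload, so any returned row counts as a leak; `probe(lookup_safe)` comes back empty, while `probe(lookup_unsafe)` flags the payloads that altered the query.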

Continuous vulnerability monitoring and LLM assessment: Once deployed, AI-generated systems require continuous scanning for LLM-specific weaknesses such as prompt injection, model drift, or insecure dependency updates. New frameworks like the OWASP AI Security and Privacy Guide recommend monitoring not only the application code but also the AI models integrated within it, treating them as living components that evolve and potentially degrade over time.

AI code auditing dashboard showing attack vectors and vulnerability events (Source: Security analytics platform)

Ultimately, AI security cannot rely on post-deployment reactions. It must be embedded from the moment code is generated. By combining automated auditing, contextual testing, provenance tracking, and human judgment, organizations can close the loop between creation and control. AI accelerates development, but only disciplined oversight ensures that acceleration doesn’t come at the expense of trust.

Building Guardrails for AI-Driven Development

At Twendee, we believe speed and safety should never be mutually exclusive. As AI-generated code becomes part of modern software pipelines, the real challenge is not eliminating automation but ensuring it operates within intelligent, secure boundaries. That’s where we focus our work.

We design AI code auditing and secure DevOps frameworks that act as continuous guardrails across the entire lifecycle of AI-generated code. Our systems automatically detect vulnerabilities introduced by LLMs, flag unsafe logic patterns, and recommend fixes before deployment. Beyond detection, we help teams integrate proactive security testing and compliance monitoring directly into their CI/CD environments so that each new AI contribution strengthens, rather than weakens, the codebase. By combining automated intelligence with structured human oversight, Twendee enables organizations to accelerate safely, turning AI-generated speed into a sustainable competitive advantage.

Conclusion

AI has become a powerful ally for developers, but like any tool that multiplies output, it also multiplies risk. The faster code is generated, the easier it is for vulnerabilities to spread silently through systems that appear stable on the surface. The challenge isn’t to slow down innovation, but to build smarter controls that evolve alongside automation. The future of secure development lies in harmony between humans and machines: automation for speed, human judgment for trust, and auditing frameworks for accountability.

At Twendee, we translate that philosophy into practice, delivering AI code auditing and secure DevOps frameworks that detect, patch, and prevent vulnerabilities before they reach production. Because real innovation doesn’t just build faster; it builds safer. Build faster. Audit smarter. Stay secure with Twendee by connecting via Facebook, X, and LinkedIn.