The way software is built in 2025 has changed more than most teams realize. These changes now dictate the cost, speed, and stability of every product, not just its features. In a landscape where one wrong architectural choice can slow a company down for years, adopting the right development trends is no longer optional. Below are the five software development trends that every business must adapt to in 2025 if they want to build faster, scale smarter, and avoid expensive technical detours.

1. AI-Assisted Development

AI-assisted development has shifted from a useful enhancement to a core productivity engine for software teams in 2025. Instead of simply suggesting lines of code, modern AI copilots now support the entire development lifecycle, removing bottlenecks and enabling engineers to focus on higher-value work. This shift is not just about automation; it is about redefining how engineering capacity is created, allocated, and scaled.

AI now contributes at every point where teams traditionally slow down: generating boilerplate code, drafting integration logic, creating tests, reviewing patterns, documenting APIs, and even highlighting potential architectural issues before they become real defects. These capabilities change the economics of engineering. Time that was once spent on repetitive tasks can be redirected to system design, performance tuning, and product-critical decisions.

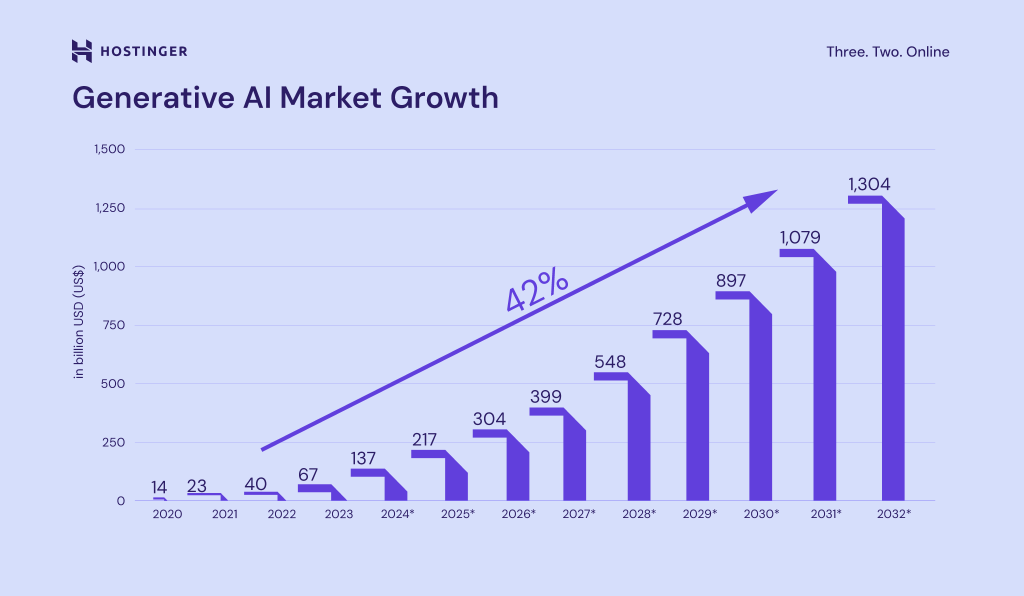

Generative AI market growth acceleration (Source: Hostinger)

Why this matters strategically

The advantage compounds quickly. Teams that adopt AI effectively gain a steady velocity uplift, often the equivalent of an extra sprint every quarter, simply because engineers spend less time on routine work. Codebases also become more consistent as AI enforces standards across contributors. QA cycles shorten when tests are produced automatically. And senior engineers are no longer consumed by low-impact fixes, freeing them to focus on the work that actually moves the product forward.

The hidden risks leaders need to understand

The benefits are clear, but AI introduces new failure patterns if used without structure. Models can generate logic that appears correct but breaks in edge cases. They may recommend outdated or insecure dependencies. Different teams using different AI tools can produce inconsistent code styles. And without governance around prompts, data, and review processes, organizations risk embedding technical debt faster than ever. For teams navigating these data and security challenges, this guide is a helpful starting point: How to Build Production-Ready AI Features Without Leaking Customer Data
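One lightweight form of the governance described above is a review gate that checks AI-suggested dependencies against a list the team has already approved. The sketch below is illustrative only: the function names, the `Finding` type, and the package versions in the usage note are hypothetical, and it assumes plain numeric version strings such as `2.31.0`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    package: str
    reason: str


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '2.31.0' into (2, 31, 0). Only plain numeric versions are handled."""
    return tuple(int(part) for part in text.split("."))


def review_dependencies(
    suggested: dict[str, str],
    approved_minimums: dict[str, str],
) -> list[Finding]:
    """Flag suggested packages that are unapproved or older than the approved minimum."""
    findings = []
    for package, version in sorted(suggested.items()):
        minimum = approved_minimums.get(package)
        if minimum is None:
            # The team has never vetted this package at all.
            findings.append(Finding(package, "not on the approved list"))
        elif parse_version(version) < parse_version(minimum):
            findings.append(
                Finding(package, f"{version} is older than approved minimum {minimum}")
            )
    return findings
```

Called with a hypothetical approved list such as `{"requests": "2.31.0", "flask": "3.0.0"}`, the function flags any suggestion that is missing from the list or pinned below the approved version, so a human reviewer sees it before the change merges. A real setup would more likely rely on a dedicated dependency scanner; the point here is only that the rule is explicit and runs on every suggestion.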

Successful organizations adopt AI in stages, not all at once:

Stage 1: Assisted Coding: AI helps with suggestions, refactoring, small test cases. Humans remain firmly in control.

Stage 2: AI-Augmented Development: AI generates full test suites, performs structured code reviews, drafts documentation, detects vulnerabilities, and supports migrations.

Stage 3: AI-Orchestrated Delivery: AI begins shaping architecture patterns, optimizing pipelines, predicting incidents, and automating environment provisioning.

Most teams in 2025 operate between Stage 1 and Stage 2. Moving to Stage 3 too quickly without clear standards can compromise architecture and long-term maintainability.

AI-assisted development is no longer a competitive edge; it is the baseline. What differentiates leading companies is how thoughtfully they integrate AI: how they govern it, how they maintain consistency, and how they ensure that increased speed does not come at the cost of reliability.

2. Low-Code Adoption

Low-code adoption is no longer framed as a tool for non-technical teams. In 2025, it has become a strategic lever that allows businesses to ship faster, reduce development overhead, and validate ideas before committing to full-scale engineering effort. Rather than replacing developers, low-code frees them from the operational burden of building routine interfaces, internal dashboards, workflow automation, or proof-of-concept features that do not require heavy engineering logic.

Why low-code matters strategically

The real strategic value of low-code is not speed alone; it is risk reduction. By validating products earlier and automating internal processes without extensive engineering, companies avoid over-investing in ideas that may not scale or features that will be replaced later. Low-code also reduces dependency on senior developers for routine tasks, allowing teams to redirect their expertise toward architecture, scalability, and core product innovation. This shift is especially meaningful in sectors where market timing determines competitiveness. Faster MVP cycles, quicker internal automation, and reduced backlog pressure all translate into stronger engineering velocity without proportional cost increases.

The risks leaders must anticipate

Despite its advantages, low-code can introduce structural risks if deployed without discipline. Teams may build critical logic on platforms that were never designed for high-throughput workloads. Shadow IT can emerge when departments create ungoverned workflows outside the engineering team’s visibility. And as applications grow, low-code-built components can become harder to maintain or integrate due to platform limitations. There is also the risk of overestimating what low-code can handle. Not every system benefits from visual development. Performance-sensitive applications, real-time features, and complex domain logic often require traditional engineering to maintain control, security, and long-term scalability.

Organizations that extract real value from low-code often follow a progressive adoption model:

Stage 1: Tactical Automation: Teams use low-code for internal workflows, reporting dashboards, and lightweight tools that reduce operational friction.

Stage 2: Rapid MVP and Prototyping: Low-code becomes a controlled environment for testing product ideas, building early-stage modules, or launching limited-scope customer-facing features.

Stage 3: Hybrid Architecture: Low-code integrates with core backend services, enabling non-critical modules to evolve on low-code while engineers build performance-critical components in code.

Low-code is not about “building apps without developers.” It is about building the right things faster and preserving engineering bandwidth for the problems that truly require engineering depth. Companies that use low-code intentionally gain a material advantage in learning speed, development cost, and roadmap flexibility, while those that treat it as a shortcut often face platform lock-in or technical limitations later.

3. Cloud-Native Apps

Cloud-native architecture has moved from a forward-looking choice to the default foundation for modern software in 2025. As applications grow more modular, data-heavy, and integration-dependent, businesses can no longer rely on monolithic setups that slow down iteration and inflate operational cost. Cloud-native enables teams to build systems that are flexible by design: scalable, resilient, and capable of evolving without rewriting the entire product.

A cloud-native foundation gives companies something that traditional architectures rarely achieve: the ability to scale exactly the part of the system that needs it, at the moment it needs it. This precision translates directly into business outcomes.

Costs become more predictable because infrastructure expands and contracts with demand.

Teams innovate faster because services are loosely coupled and can be updated independently.

Reliability improves because failures in one module no longer bring down the entire platform.

Cloud-native turns infrastructure into a competitive advantage rather than a cost center, especially for businesses operating across multiple markets, devices, or user segments.

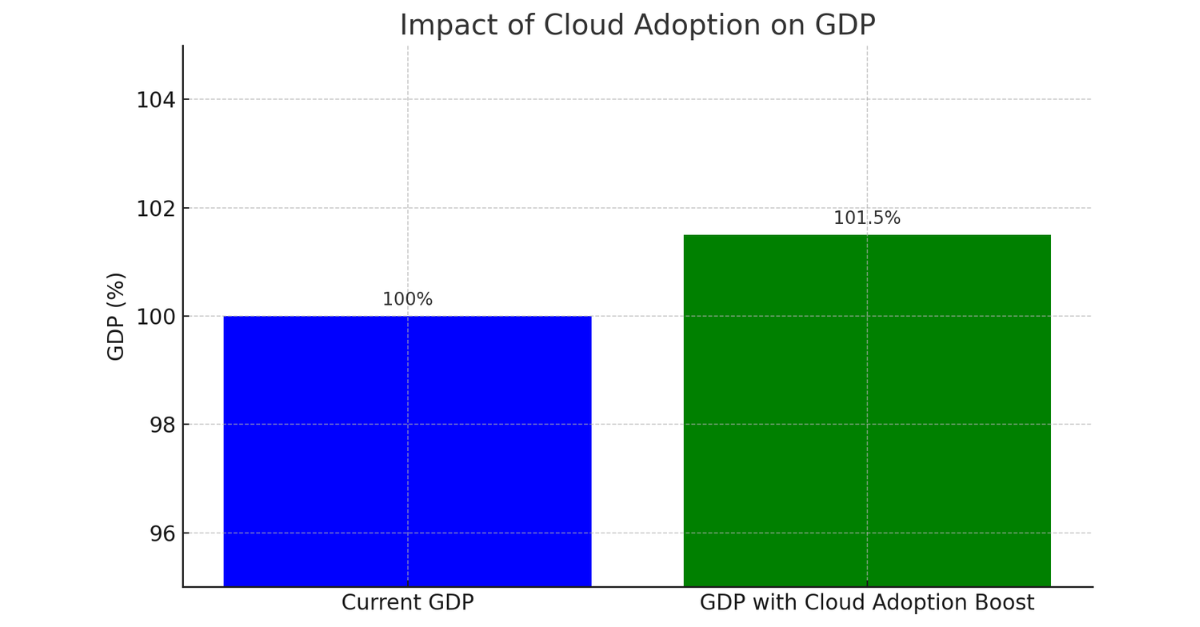

Economic impact of cloud adoption (Source: Report Analysis)

The risks leaders need to understand

The shift to cloud-native brings benefits but also introduces complexity if adopted prematurely or without proper governance.

Teams may adopt microservices too early, fragmenting the codebase and creating operational overhead they are not ready to manage.

Misconfigured autoscaling or service meshes can unintentionally increase cloud cost rather than reduce it.

A purely cloud-native mindset without guardrails can lead to tool sprawl, with different teams using different stacks and making maintenance more difficult.

And for MVP-stage products, the architectural overhead of cloud-native can outweigh the benefits entirely.
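The autoscaling risk above is easier to see with the arithmetic written out. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)) and adds a minimum and maximum replica count as guardrails. The function name and the default limits are assumptions chosen for illustration, not recommended values.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Proportional scaling rule, clamped to a min/max replica range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the replica count by how far the observed metric is from the target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # The clamp is the cost guardrail: without max_replicas, a metric spike
    # (or a mis-set target) can request far more capacity than intended.
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas at 90% CPU against a 60% target, the rule asks for 6 replicas. If the observed metric jumps to 300 against the same target, the raw answer would be 20, and the cap holds it at 10. A target set too low or a missing cap is the kind of misconfiguration that turns autoscaling into a cost problem.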

Many companies fail because they pursue cloud-native as a “checklist item,” not as a response to real scaling or reliability demands. Organizations that transition successfully typically evolve through a staged approach:

Stage 1: Containerization for Stability: Applications migrate from monoliths to containerized environments, improving deployment reliability without full microservice fragmentation.

Stage 2: Modularization with Intent: Services are split only where it creates real business or engineering value, such as separating billing, authentication, or data pipelines to reduce risk and improve maintainability.

Stage 3: Full Cloud-Native Orchestration: Automated scaling, service mesh governance, observability pipelines, and multi-cluster management become part of the operating model.

Cloud-native isn’t a modernization trend; it’s the architectural baseline for building software that can evolve in an unpredictable market. The real advantage does not come from using microservices or Kubernetes alone, but from adopting cloud-native intentionally, at the pace that matches product growth and organizational capability. When done right, it creates software that scales faster, breaks less, and adapts more easily to new business needs.

4. DevSecOps

By 2025, DevSecOps has shifted from a best practice to a non-negotiable foundation for modern software. With cyberattacks growing more automated and regulatory pressure rising, security can no longer be treated as a final checkpoint. It must be built directly into the development lifecycle, just like testing or CI/CD. DevSecOps changes how organizations manage risk. Instead of discovering vulnerabilities late, security moves upstream into code decisions, dependency choices, infrastructure setup, and deployment pipelines. The result is software that ships faster, with fewer surprises and significantly lower exposure to failures.

DevSecOps gives companies structural advantages that traditional security models cannot offer:

Releases become more predictable because issues are caught early rather than discovered late in QA or after deployment.

Compliance becomes manageable rather than reactive, which is essential for sectors preparing to scale across borders.

Infrastructure becomes more resilient, as misconfigurations, insecure containers, and weak IAM settings are flagged automatically.

The cost of incidents decreases sharply, because vulnerabilities are addressed before they accumulate into systemic risk.

In a landscape where one security incident can halt an entire growth cycle, DevSecOps shifts organizations from crisis management to controlled stability.

Where companies go wrong

The risk is not in adopting DevSecOps; it is in adopting it poorly. Many teams introduce scanning tools without redesigning processes, turning security into noise instead of clarity. Others distribute responsibility so widely that no one owns it. And some assume passing automated checks equals safety, even when core architectural patterns remain vulnerable. These pitfalls explain why DevSecOps frequently fails to deliver value for organizations that treat it as another tool, not a discipline.

A realistic path to DevSecOps maturity

Successful teams take DevSecOps step by step:

Stage 1: Shift-Left Security: Basic scanning (SAST/DAST), dependency checks, secret detection, and container scanning are integrated early into pipelines.

Stage 2: Secure-by-Default Automation: Infrastructure as code validation, automated policy enforcement, and pipeline-level access control reduce human error.

Stage 3: Continuous Security Intelligence: Real-time threat detection, behavioral monitoring, automated incident response, and AI-driven vulnerability triage become operational standards.
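To make the secret detection step in Stage 1 concrete, here is a minimal sketch of a check that a pipeline could run against changed files before merge. The rule names and patterns are illustrative assumptions and far from exhaustive; dedicated scanners cover many more credential formats and handle false positives better.

```python
import re

# Illustrative rules only. Each maps a rule name to a pattern that suggests
# a credential has been committed in plain text.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "hardcoded_password": re.compile(
        r"(?i)\bpassword\s*=\s*['\"][^'\"]{4,}['\"]"
    ),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((line_number, rule_name))
    return hits
```

A pipeline step would call `scan_text` on each changed file and fail the build when the returned list is non-empty, which is what catching issues early looks like in practice: the secret is blocked at commit time instead of being found in production.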

DevSecOps is not about slowing teams down with security steps; it is about building pipelines where speed and security support each other. Companies that treat DevSecOps as a strategic enabler, not a compliance checklist, gain stability, reduce risk, and release software that scales safely in unpredictable markets.

5. Automation in Software Delivery

End-to-end automation has become the backbone of modern engineering in 2025. As products grow more modular and release cycles accelerate, teams can no longer rely on manual testing, environment setup, or deployment orchestration. Automation now connects the entire development lifecycle, from code commit to monitoring in production, creating a continuous, predictable, and resilient delivery pipeline. This shift is not just about efficiency. It changes how organizations think about engineering capacity. Automation handles the repetitive work so teams can focus on architecture, design, performance optimization, and the product decisions that meaningfully move the business forward.

Automation delivers advantages that compound over time:

Releases become faster because CI/CD pipelines run without manual intervention.

Quality improves when testing is automated at multiple layers.

Outages decrease because infrastructure can detect issues and recover on its own.

The result is a development environment where teams ship more confidently, with fewer bottlenecks, and with a level of consistency impossible to achieve manually.

Despite its value, automation can become counterproductive when implemented without discipline. Over-automating early can create fragile pipelines. Poorly designed scripts can hide critical issues or fail silently. And tool sprawl, where teams adopt multiple overlapping automation tools, can make maintenance as complicated as manual processes. The goal isn’t to automate everything. It’s to automate the right things: the tasks that slow teams down, introduce human error, or offer no strategic benefit when done manually.

A practical pathway to automation maturity

Organizations that succeed with automation usually follow three steps:

Stage 1: Pipeline Automation: Reliable CI/CD, automated builds, and fundamental test coverage.

Stage 2: Testing & Environment Automation: Automated regression testing, environment provisioning, and repeatable staging workflows.

Stage 3: Autonomous Operations: AI-assisted monitoring, auto-scaling, self-healing infrastructure, and automated rollback mechanisms.
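An automated rollback mechanism from Stage 3 can start as a very small decision rule. The sketch below assumes a hypothetical `HealthSample` record collected after each deployment; the 5% error threshold and the 100-request minimum are placeholder values that a team would tune to its own traffic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HealthSample:
    requests: int
    errors: int


def should_roll_back(
    samples: list[HealthSample],
    max_error_rate: float = 0.05,
    min_requests: int = 100,
) -> bool:
    """Decide whether a new release should be rolled back.

    Returns True only when enough traffic has been observed and the
    aggregate error rate exceeds the allowed maximum.
    """
    total_requests = sum(sample.requests for sample in samples)
    total_errors = sum(sample.errors for sample in samples)
    if total_requests < min_requests:
        # Too little traffic to judge; avoid rolling back on noise.
        return False
    return total_errors / total_requests > max_error_rate
```

The minimum-traffic check reflects the warning above about fragile automation: a rule that fires on a handful of requests produces rollbacks nobody trusts, while a rule that fails silently hides real incidents. Keeping the decision in one small, testable function makes both failure modes easier to spot.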

Automation is no longer a supporting tool; it is the engine that maintains engineering velocity. Teams that invest intentionally in automation achieve a development rhythm that is faster, more reliable, and far more scalable. Those that rely on manual workflows fall behind, not because they lack talent, but because their systems cannot keep pace with the speed of modern software.

Conclusion

2025 is no longer a year of incremental upgrades. It is a year where engineering choices directly shape a company’s ability to move fast, control costs, and compete sustainably. The businesses that win will be those that adopt these trends deliberately: choosing what fits their product, avoiding what adds unnecessary complexity, and executing with enough discipline to prevent technical debt from accumulating.

This is also where the role of a trusted technology partner becomes critical. Twendee supports companies in making these decisions with clarity: advising on the right architectural direction, identifying which trends create real ROI for each stage of growth, and providing the engineering teams needed to implement them properly. The goal is not to adopt every trend; it is to adopt the ones that truly strengthen your product and competitive position.

If your team is evaluating how these 2025 development trends fit into your roadmap, Twendee can help through technology consulting, architectural reviews, or hands-on engineering execution. Explore how Twendee enables smarter, faster development on LinkedIn, X, and Facebook.